Dear All,

I have a very long list of values, as follows:

(43, 560)

(516, 1533)

(1316, 3047)

(520, 1528)

(3563, 1316)

(45, 557)

(3562, 1312)

(2686, 1964)

(2424, 3340)

(3559, 1317)

(50, 561)

(2427, 3336)

(1313, 3046)

(3562, 1313)

(3559, 1318)

(2689, 1962)

(2429, 3339)

(3721, 2585)

(1317, 3048)

I would like to group values within a certain tolerance.

Say I set the tolerance to 3: my 1st and 6th values, (43, 560) and (45, 557), should be grouped together and replaced by their average, (44, 558.5).

What approach would be the best?

I really don't know where to start. The only idea I had was the following.

I take each tuple of the initial list and add/subtract each value from 1 to 3 (my tolerance) to both coordinates, so the entry (43, 560) will result in: (43, 560), (42, 559), (41, 558), (40, 557), (44, 561), (45, 562), (46, 563).

I repeat this for all the entries of my initial list and take the values with the most occurrences... but I am afraid this will just lengthen my list instead of reducing it, and I am not sure I would get correct results.
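To make the idea above concrete, here is a rough sketch of it, assuming the same offset is added to both coordinates as in the (43, 560) example. The helper name `expand` is made up for illustration. It is only meant to show how the list grows and whether the two example points end up sharing any candidate.

```python
from collections import Counter


def expand(point, tolerance):
    """Return the point shifted by every offset from -tolerance to +tolerance.

    The same offset is applied to x and y, as in the (43, 560) example.
    """
    x, y = point
    return [(x + d, y + d) for d in range(-tolerance, tolerance + 1)]


points = [(43, 560), (45, 557)]

# Expand every point and count how often each candidate tuple occurs.
candidates = [c for p in points for c in expand(p, 3)]
counts = Counter(candidates)

print(len(candidates))       # 14
print(max(counts.values()))  # 1
```

In this sketch the two points that should be grouped produce 14 candidates with no tuple in common, so counting occurrences would not link them. That seems to confirm the worry that this approach enlarges the list without giving the right groups.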

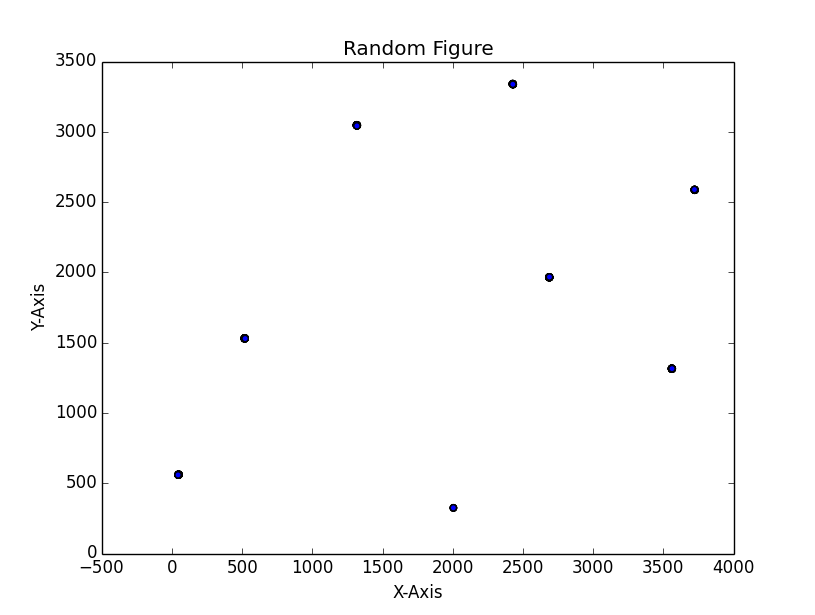

As an addition, I tried to plot the values and got the following image

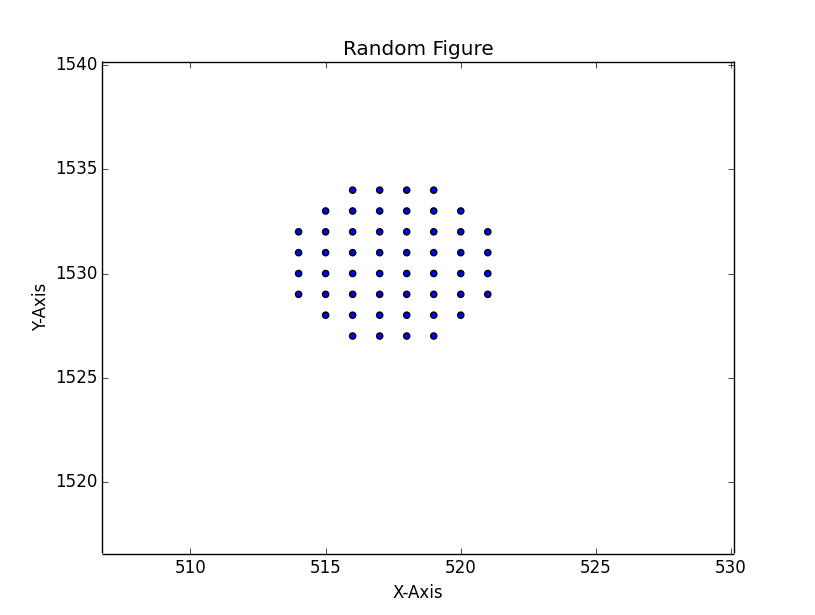

but if I zoom in each cluster, I see something like this

I would like each cluster to become one single point (so in the end I have 8 x,y coordinates only).
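The behaviour described here could be sketched roughly as below. This is only one possible reading, not a definitive method: it assumes "within tolerance" means that both x and y differ by at most the tolerance from the first point of a group (with a straight-line distance, (43, 560) and (45, 557) would be about 3.6 apart and would not be grouped at tolerance 3). The function name `group_points` is hypothetical.

```python
def group_points(points, tolerance):
    """Greedily group points and return the mean of each group.

    A point joins the first group whose first member (its seed) is within
    `tolerance` in both x and y; otherwise it starts a new group.
    """
    groups = []  # each group is a list of (x, y) tuples; group[0] is the seed
    for x, y in points:
        for group in groups:
            seed_x, seed_y = group[0]
            if abs(x - seed_x) <= tolerance and abs(y - seed_y) <= tolerance:
                group.append((x, y))
                break
        else:
            # No existing group was close enough, so start a new one.
            groups.append([(x, y)])

    # Replace every group by the average of its members.
    return [
        (sum(px for px, _ in g) / len(g), sum(py for _, py in g) / len(g))
        for g in groups
    ]


print(group_points([(43, 560), (516, 1533), (45, 557)], tolerance=3))
# [(44.0, 558.5), (516.0, 1533.0)]
```

Under these assumptions the result depends on the order of the points and on the tolerance. For instance, with a tolerance of 3 the point (50, 561) would not join the (43, 560) group, because the x values differ by 7, so a larger tolerance may be needed before every visible cluster collapses to a single point.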

Any help is much appreciated!

giancan