Retrieval augmented generation (RAG) allows large language models (LLMs) to answer queries related to the data the models have not seen during training. In my previous article, I explained how to develop RAG systems using the Claude 3.5 Sonnet model.

However, a RAG system can only answer queries about the data stored in its vector database. For example, suppose you have a RAG system that answers queries about the financial documents in your database. If you ask it to search the internet for some information, it will not be able to do so.

This is where tools and agents come into play. Tools and agents enable LLMs to retrieve information from various external sources such as the internet, Wikipedia, YouTube, or virtually any Python method implemented as a tool in LangChain.

This article will show you how to enhance the functionalities of your RAG systems using tools and agents in the Python LangChain framework.

So, let's begin without further ado.

Installing and Importing Required Libraries

The following script installs the required libraries, including the Python LangChain framework and its associated modules and the OpenAI client.

!pip install -U langchain

!pip install langchain-core

!pip install langchainhub

!pip install -qU langchain-openai

!pip install pypdf

!pip install faiss-cpu

!pip install --upgrade --quiet wikipedia
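LangChain's module layout changes between releases, so it can help to record which versions the commands above actually installed. The snippet below is a minimal sketch that uses only the standard library; the `PACKAGES` list and the `installed_versions()` helper are my own names, not part of LangChain.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the pip commands above.
PACKAGES = [
    "langchain",
    "langchain-core",
    "langchainhub",
    "langchain-openai",
    "pypdf",
    "faiss-cpu",
    "wikipedia",
]


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```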

The script below imports the required libraries into your Python application.

from langchain.tools import WikipediaQueryRun

from langchain_community.utilities import WikipediaAPIWrapper

from langchain.tools.retriever import create_retriever_tool

from langchain.agents import AgentExecutor, create_tool_calling_agent

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_community.chat_message_histories import ChatMessageHistory

from langchain_core.runnables.history import RunnableWithMessageHistory

from langchain_core.tools import tool

from langchain import hub

import os

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.vectorstores import FAISS

Enhancing RAG with Tools and Agents

To enhance RAG using tools and agents in LangChain, follow these steps:

- Import or create tools you want to use with RAG.

- Create a retrieval tool for RAG.

- Add retrieval and other tools to an agent.

- Create an agent executor that runs the agent and executes its tool calls.

The benefit of agents over chains is that agents decide at runtime which tool to use to answer user queries.

In this article, we will extend a RAG system with the Wikipedia tool. We will create a LangChain agent with a RAG tool capable of answering questions from a document containing information about the British parliamentary system. We will then add the Wikipedia tool to the agent so that it can also answer questions the document does not cover.

If a user asks a question about the British parliament, the agent will call the RAG tool to answer it. In case of any other query, the agent will use the Wikipedia tool to search for answers on Wikipedia.
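The routing behaviour described above can be pictured with a small plain-Python sketch. In the real agent, the LLM reads each tool's description and decides which one to call; here a naive word-overlap score stands in for that decision. Everything in this block (`ToyTool`, `choose_tool()`, the fake search functions, and the keyword descriptions) is hypothetical and only illustrates the idea.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyTool:
    name: str
    description: str
    func: Callable[[str], str]


def fake_rag_search(query: str) -> str:
    # Placeholder for the document retriever.
    return f"[document chunks matching: {query}]"


def fake_wikipedia_search(query: str) -> str:
    # Placeholder for the Wikipedia lookup.
    return f"[Wikipedia summary for: {query}]"


TOOLS = [
    ToyTool("british_parliament_search", "british parliament house lords commons", fake_rag_search),
    ToyTool("WikipediaSearch", "general knowledge wikipedia internet", fake_wikipedia_search),
]


def choose_tool(query, tools, fallback="WikipediaSearch"):
    """Stand-in for the LLM's choice: pick the tool whose description shares
    the most words with the query, or the fallback tool if nothing matches."""
    words = set(query.lower().replace("?", "").split())
    best, best_score = None, 0
    for candidate in tools:
        score = len(words & set(candidate.description.split()))
        if score > best_score:
            best, best_score = candidate, score
    if best is None:
        best = next(t for t in tools if t.name == fallback)
    return best


for question in [
    "How many members are in the House of Commons?",
    "Who is the current president of the United States?",
]:
    selected = choose_tool(question, TOOLS)
    print(selected.name, "->", selected.func(question))
```

A real LLM makes this choice from the meaning of the query rather than from shared keywords, which is why clear tool descriptions matter.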

Let's implement this model step by step.

Importing Wikipedia Tool

The following script creates an instance of LangChain's built-in Wikipedia tool. To retrieve Wikipedia pages, pass your query to the run() method.

wikipedia_tool = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper())

response = wikipedia_tool.run("What are large language models?")

print(response)

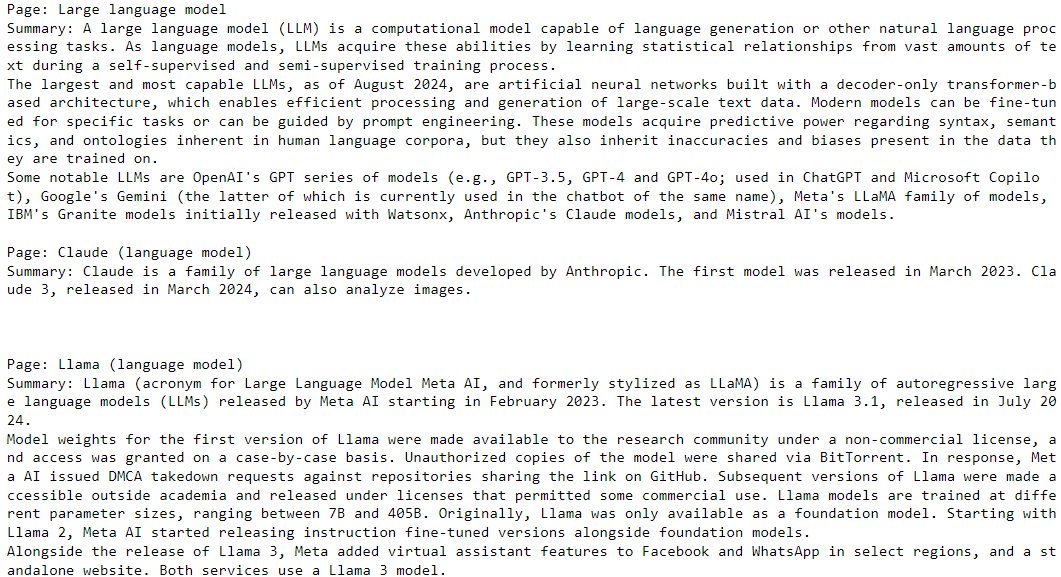

Output:

To add the above tool to an agent, you must define a function using the @tool decorator. Inside the function, call the run() method as before and return the response. The docstring matters: it becomes the tool description that the agent reads when deciding whether to call the tool.

@tool
def WikipediaSearch(search_term: str):
    """
    Use this tool to search for wikipedia articles.
    If a user asks to search the internet, you can search via this wikipedia tool.
    """
    result = wikipedia_tool.run(search_term)
    return result
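To see why the function name, type hints, and docstring matter, here is a rough plain-Python sketch of the kind of metadata a tool decorator can collect. The `toy_tool` decorator and its `tool_name`, `tool_description`, and `tool_args` attributes are hypothetical; this is not LangChain's implementation of @tool.

```python
import inspect


def toy_tool(func):
    """Hypothetical decorator: attach the metadata an agent could read."""
    func.tool_name = func.__name__
    func.tool_description = inspect.getdoc(func) or ""
    # Record each parameter with the name of its annotated type.
    func.tool_args = {
        name: getattr(param.annotation, "__name__", str(param.annotation))
        for name, param in inspect.signature(func).parameters.items()
    }
    return func


@toy_tool
def wikipedia_search(search_term: str) -> str:
    """Use this tool to search for Wikipedia articles."""
    return f"(pretend Wikipedia result for {search_term!r})"


print(wikipedia_search.tool_name)
print(wikipedia_search.tool_description)
print(wikipedia_search.tool_args)
```

The agent never sees the function body. It only sees this kind of summary, so a vague docstring makes it harder for the model to pick the right tool.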

Next, we will create a retrieval tool that implements the RAG functionality.

Creating Retrieval Tool

In my article on Retrieval Augmented Generation with Claude 3.5 Sonnet, I explained how to create a retriever using LangChain. The process remains the same here.

openai_api_key = os.getenv('OPENAI_API_KEY')

loader = PyPDFLoader("https://web.archive.org/web/20170809122528id_/http://global-settlement.org/pub/The%20English%20Constitution%20-%20by%20Walter%20Bagehot.pdf")

docs = loader.load_and_split()

documents = RecursiveCharacterTextSplitter(
    chunk_size=1000, chunk_overlap=200
).split_documents(docs)

embeddings = OpenAIEmbeddings(openai_api_key = openai_api_key)

vector = FAISS.from_documents(documents, embeddings)

retriever = vector.as_retriever()
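The chunk_size and chunk_overlap values used above control how the text is cut before it is embedded. The sketch below is a simplified fixed-window splitter written in plain Python to show what overlap means. It is not how RecursiveCharacterTextSplitter works internally (that class prefers to break on separators such as paragraphs and spaces), and `split_with_overlap()` is a hypothetical helper.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into windows of chunk_size characters, where each window
    repeats the last chunk_overlap characters of the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# Small values make the overlap easy to see.
for chunk in split_with_overlap("abcdefghijklmnopqrstuvwxyz", chunk_size=10, chunk_overlap=3):
    print(chunk)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.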

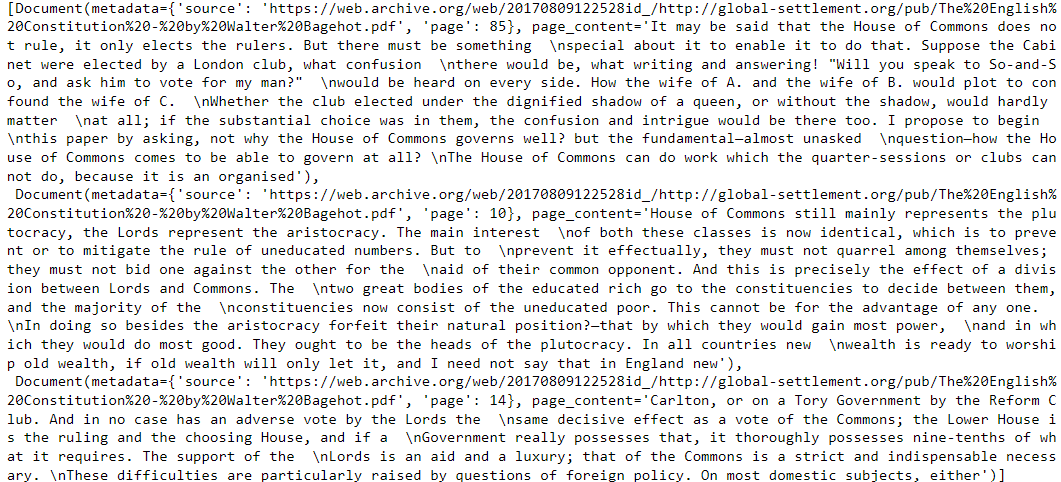

You can query the vector retriever using the invoke() method. In the output, you will see the document sections that have the highest semantic similarity to the input.

query = """

"What is the difference between the house of lords and house of commons? How members are elected for both?"

"""

retriever.invoke(query)[:3]

Output:

Next, we will create a retrieval tool that uses the vector retriever you created to answer user queries. You can create a RAG retrieval tool using the create_retriever_tool() function.

BritishParliamentSearch = create_retriever_tool(
    retriever,
    "british_parliament_search",
    "Use this tool to search for information about the British parliament, the House of Lords, the House of Commons, and any other related information.",
)
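Conceptually, a retriever tool is a named and described function that runs the retriever and hands the retrieved text back to the LLM as a single string. The sketch below shows that idea in plain Python. `ToyDocument`, `toy_retriever()`, and `make_retriever_tool()` are hypothetical stand-ins, the corpus is placeholder text, and joining chunks with a blank line is an assumption about the output format rather than a statement of what create_retriever_tool() does internally.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ToyDocument:
    page_content: str


# Placeholder corpus; the real retriever searches the embedded PDF chunks.
CORPUS = [
    ToyDocument("Placeholder chunk about the House of Lords."),
    ToyDocument("Placeholder chunk about the House of Commons."),
    ToyDocument("Placeholder chunk mentioning both Lords and Commons."),
]


def toy_retriever(query: str) -> List[ToyDocument]:
    """Keyword match standing in for a vector similarity search."""
    words = [w for w in query.lower().split() if len(w) > 3]
    return [d for d in CORPUS if any(w in d.page_content.lower() for w in words)]


def make_retriever_tool(retrieve: Callable[[str], List[ToyDocument]], name: str, description: str):
    """Wrap a retriever as a function that returns one string for the LLM."""
    def run(query: str) -> str:
        docs = retrieve(query)
        return "\n\n".join(doc.page_content for doc in docs)

    run.__name__ = name
    run.__doc__ = description
    return run


search = make_retriever_tool(
    toy_retriever,
    "british_parliament_search",
    "Search for information about the British parliament.",
)
print(search("Lords"))
```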

We have created a Wikipedia tool and a retriever (RAG) tool; the next step is adding these tools to an agent.

Creating Tool Calling Agent

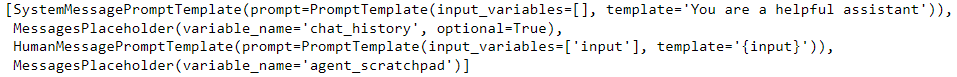

First, we will create a list containing all our tools. Next, we will define the prompt that the agent will use. I used a ready-made prompt pulled from the LangChain Hub (hosted on LangSmith), which you can see in the script's output below.

tools = [WikipediaSearch, BritishParliamentSearch]

# Get the prompt to use - you can modify this!

prompt = hub.pull("hwchase17/openai-functions-agent")

prompt.messages

Output:

You can use your own prompt if you want.

We also need to define the LLM we will use with the agent. We will use the OpenAI GPT-4o in our script. You can use any other LLM from LangChain.

llm = ChatOpenAI(
    model="gpt-4o",
    temperature=0,
    api_key=openai_api_key,
)

Next, we will create a tool-calling agent that generates responses using the tools, LLM, and the prompt we just defined.

Finally, to execute an agent, we need to define our agent executor, which returns the agent's response to the user when invoked via the invoke() method.

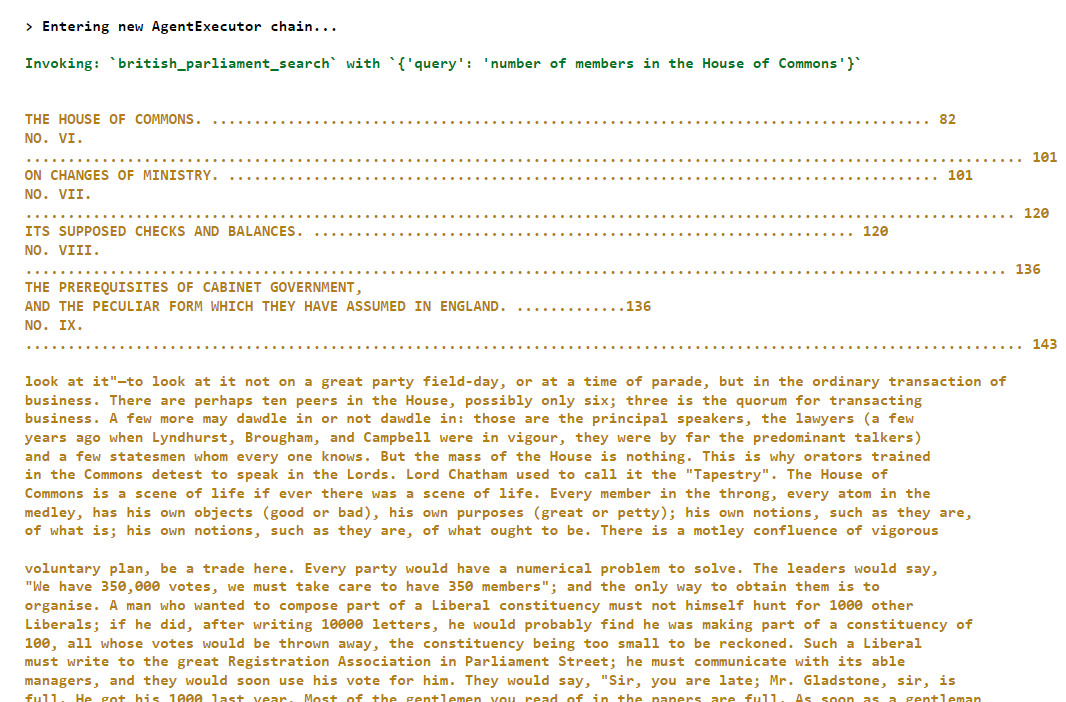

In the script below, we ask our agent a question about the British parliament.

agent = create_tool_calling_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose = True)

response = agent_executor.invoke({"input": "How many members are there in the House of Common?"})

print(response)

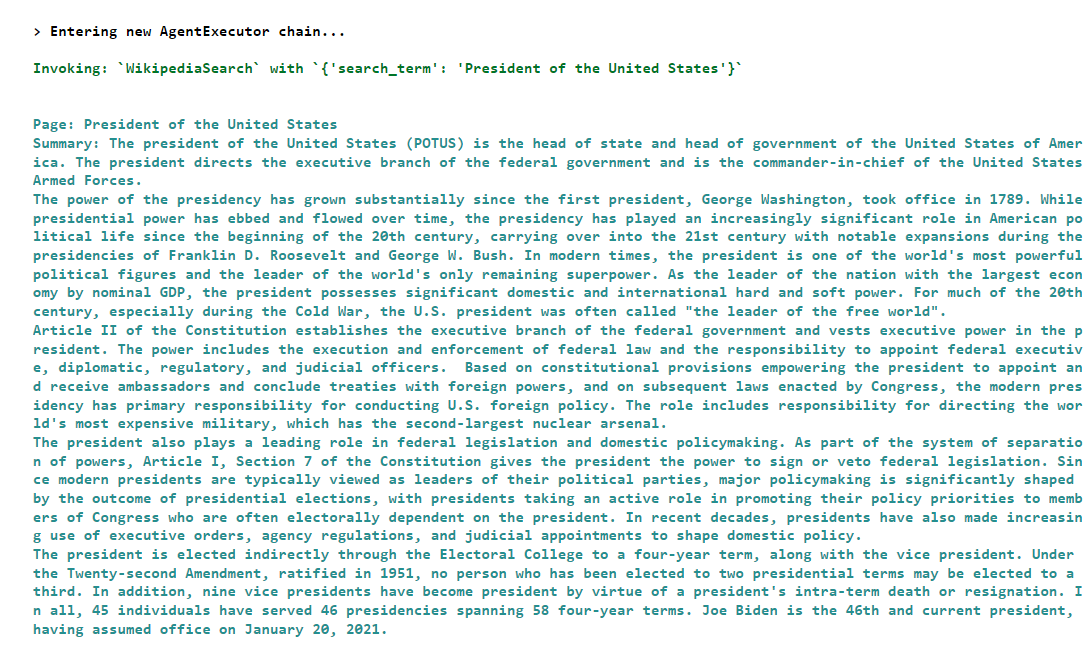

Output:

As you can see from the above output, the agent invoked the british_parliament_search tool to generate a response.
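The verbose trace reflects a loop: the model either asks for a tool or gives a final answer, and the executor runs the requested tool and feeds the observation back to the model. The following plain-Python sketch illustrates that loop under simplifying assumptions. `run_agent()`, `scripted_model()`, and the dictionary-based decision format are hypothetical and much simpler than AgentExecutor itself.

```python
def run_agent(model, tools, user_input, max_steps=5):
    """Ask the model what to do, run any requested tool, and repeat
    until the model returns a final answer or the step limit is hit."""
    scratchpad = []  # (tool_name, tool_input, observation) for each step so far
    for _ in range(max_steps):
        decision = model(user_input, scratchpad)
        if decision["type"] == "final":
            return {"input": user_input, "output": decision["text"]}
        observation = tools[decision["tool"]](decision["tool_input"])
        scratchpad.append((decision["tool"], decision["tool_input"], observation))
    return {"input": user_input, "output": "Stopped: step limit reached."}


def scripted_model(user_input, scratchpad):
    """Fake LLM: call the search tool once, then answer from its observation."""
    if not scratchpad:
        return {"type": "tool", "tool": "british_parliament_search", "tool_input": user_input}
    _, _, observation = scratchpad[-1]
    return {"type": "final", "text": f"Based on the documents: {observation}"}


toy_tools = {"british_parliament_search": lambda q: f"<chunks retrieved for '{q}'>"}

result = run_agent(scripted_model, toy_tools, "How many members are there in the House of Commons?")
print(result["output"])
```

The step limit is there so that a model that keeps requesting tools cannot loop forever.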

Let's ask another question about the President of the United States. Since this information is not available in the document that the RAG tool uses, the agent will call the WikipediaSearch tool to generate the response to this query.

response = agent_executor.invoke({"input": "Who is the current president of United States?"})

Output:

Finally, if you only want the answer text without the additional information, you can use the output key of the response, as shown below:

print(response["output"])

Output:

The current President of the United States is Joe Biden. He is the 46th president and assumed office on January 20, 2021.

As a last step, I will show you how to add memory to your agents so that they remember previous conversations with the user.

Adding Memory to Agent Executor

We will first create an AgentExecutor object as we did previously, but this time, we will set verbose = False since we are not interested in seeing the agent's internal workings.

Next, we will create an object of the ChatMessageHistory() class to save past conversations.

Finally, we will create an object of the RunnableWithMessageHistory() class and pass to it the agent executor and message history objects. We also pass the keys for the user input and chat history.

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose = False)

message_history = ChatMessageHistory()

agent_with_chat_history = RunnableWithMessageHistory(
    agent_executor,
    # This is needed because in most real-world scenarios, a session id is needed.
    # It isn't really used here because we are using a simple in-memory ChatMessageHistory.
    lambda session_id: message_history,
    input_messages_key="input",
    history_messages_key="chat_history",
)

Next, we will define a function generate_response() that accepts a user query as a parameter and invokes the RunnableWithMessageHistory object. You also need to pass a session ID, which identifies the conversation. Note that the lambda above returns the same message_history object for every session ID, so if you want several independent conversations, you need to keep a separate history per session ID.

def generate_response(query):
    response = agent_with_chat_history.invoke(
        {"input": query},
        config={"configurable": {"session_id": "<foo>"}}
    )
    return response
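If you do need separate conversations, the history lookup has to depend on the session ID. The plain-Python sketch below shows one way to think about it: a dictionary that maps each session ID to its own message list. The names `get_session_history()`, `chat()`, and `echo_with_context()` are hypothetical, and the fake answer function only reports how many earlier messages it was given.

```python
from collections import defaultdict

# One message list per session ID, created on first use.
_store = defaultdict(list)


def get_session_history(session_id):
    """Return the history that belongs to this session only."""
    return _store[session_id]


def chat(session_id, user_text, answer_fn):
    """Answer using this session's history, then record the new exchange."""
    history = get_session_history(session_id)
    answer = answer_fn(user_text, list(history))
    history.append(("human", user_text))
    history.append(("ai", answer))
    return answer


def echo_with_context(user_text, history):
    # Fake model: a real LLM would read the earlier messages to resolve follow-ups.
    return f"answer to {user_text!r} given {len(history)} earlier messages"


print(chat("alice", "Who is the current President of America?", echo_with_context))
print(chat("alice", "And of France?", echo_with_context))
print(chat("bob", "And of France?", echo_with_context))
```

In this sketch, the second question in the "alice" session arrives with two earlier messages as context, while the same question in the "bob" session arrives with none.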

Let's test the generate_response() function by asking a question about the British parliament.

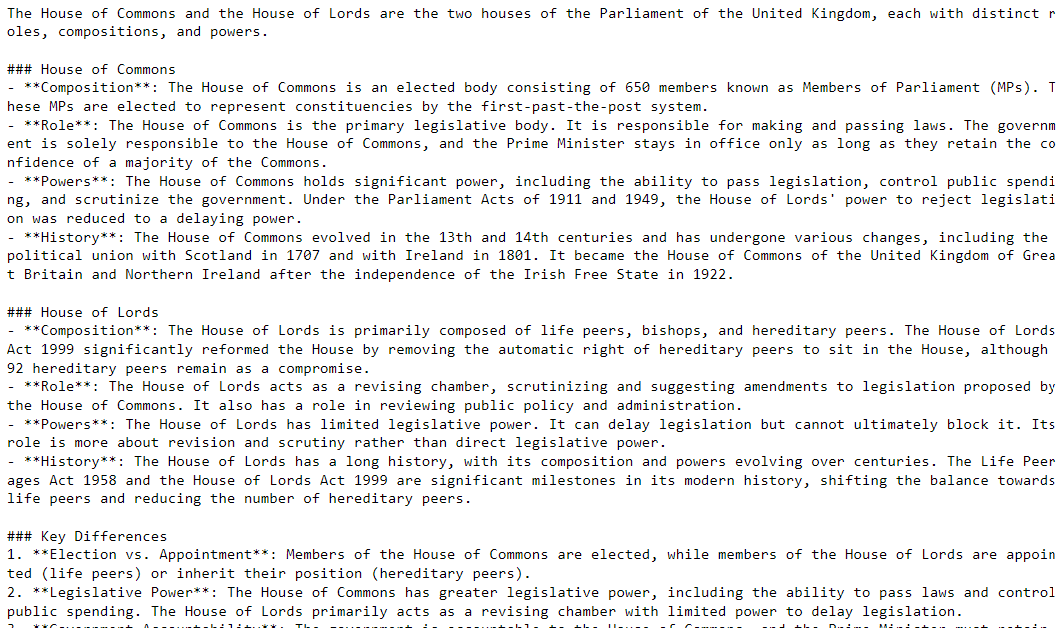

query = "What is the difference between the house of lords and the house of commons?"

response = generate_response(query)

print(response["output"])

Output:

Next, we will ask a question about the US president.

query = "Who is the current President of America?"

response = generate_response(query)

print(response["output"])

Output:

The current President of the United States is Joe Biden. He assumed office on January 20, 2021, and is the 46th president of the United States.

Next, we will ask only "And of France?". Because the agent remembers the previous conversation, it will work out that the user wants to know who the current French President is.

query = "And of France?"

response = generate_response(query)

print(response["output"])

Output:

The current President of France is Emmanuel Macron. He has been in office since May 14, 2017, and was re-elected for a second term in 2022.

Conclusion

Retrieval augmented generation allows you to answer questions using documents from a vector database. However, you may need to fetch information from external sources. This is where tools and agents come into play.

In this article, you saw how to enhance the functionalities of your RAG systems using tools and agents in LangChain. I encourage you to incorporate other tools and agents into your RAG systems to build amazing LLM products.