Hello,

I am programming an image application with a GUI.

I open a grayscale image like this:

String^ s = openFileDialog1->FileName;

s = s->Substring(s->LastIndexOf('\\') + 1, (s->Length - s->LastIndexOf('\\')) - 1);

InputImageFileName = s;

bmpInputImage = gcnew System::Drawing::Bitmap(openFileDialog1->FileName);

Then I create a new image:

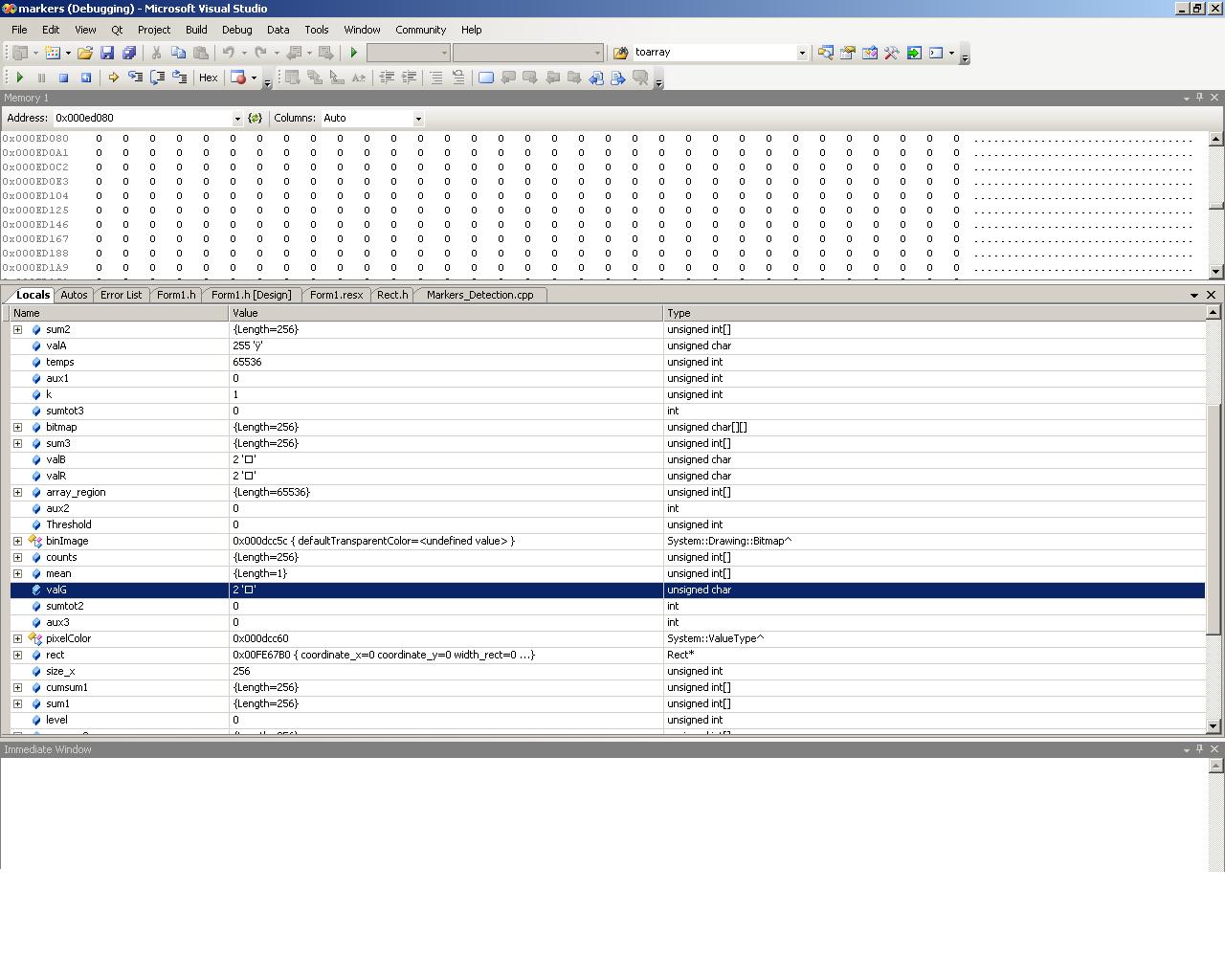

binaryImage = gcnew System::Drawing::Bitmap(bmpInputImage);

I want to work with this image as an unsigned char* so that I can read each pixel value, build a histogram, and perform further operations.
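To make clear what I am after, here is a rough sketch of the histogram step in plain C++. It assumes I already have the pixels as an 8-bit-per-pixel buffer, which is exactly the part I am missing. The name `computeHistogram` and the `stride` parameter (bytes per row, assumed positive and possibly larger than the width because of padding) are hypothetical and not part of my current code.

```cpp
#include <array>
#include <cstddef>

// Hypothetical helper: counts how often each gray level (0..255) occurs.
// pixels: first byte of an 8-bit-per-pixel buffer (assumed to be available)
// stride: bytes per row; assumed positive, may exceed width due to padding
std::array<unsigned int, 256> computeHistogram(const unsigned char* pixels,
                                               int width, int height, int stride)
{
    std::array<unsigned int, 256> histogram{};  // all bins start at zero

    for (int y = 0; y < height; ++y) {
        // Start of row y; padding bytes after the first `width` bytes are skipped.
        const unsigned char* row = pixels + static_cast<std::size_t>(y) * stride;
        for (int x = 0; x < width; ++x) {
            ++histogram[row[x]];
        }
    }
    return histogram;
}
```

With something like this, `computeHistogram(buffer, width, height, stride)[128]` would give the number of pixels with gray level 128.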

I have found the following method, but even if it works (I am not sure), I do not know how to use it:

System::Drawing::Image::Save(System::String^ filename, System::Drawing::Imaging::ImageFormat^ format)

// then I will apply format->ToString();

Could any of you help me?

Thanks in advance!