On November 20, 2024, OpenAI updated its GPT-4o model, claiming it is more creative and accurate on several benchmarks.

In this article, I compare the GPT-4o November update with the previous version (August update) for text summarization and classification tasks.

By the end of this article, you will see whether the new update outperforms the previous one, particularly for text classification and summarization tasks.

So, let's begin without further ado.

Importing and Installing Required Libraries

You must install the OpenAI Python library to access OpenAI models in Python. In addition, you need to install a few other libraries that will help you evaluate OpenAI models for text summarization and classification tasks.

!pip install openai

!pip install rouge-score

!pip install --upgrade openpyxl

!pip install pandas openpyxl

The following script imports the required libraries in our Python application.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from itertools import combinations

from collections import Counter

from sklearn.metrics import hamming_loss, accuracy_score

from rouge_score import rouge_scorer

from openai import OpenAI

from google.colab import userdata

OPENAI_API_KEY = userdata.get('OPENAI_API_KEY')

We will also define an OpenAI client object to call the OpenAI API.

client = OpenAI(api_key = OPENAI_API_KEY)

Comparison for Text Summarization

Let's first see the results of text summarization. We will use the News Article Summary dataset you can download from Kaggle to summarize the articles.

# Dataset download link (hosted on GitHub)

# https://github.com/reddzzz/DataScience_FP/blob/main/dataset.xlsx

dataset = pd.read_excel(r"/content/dataset.xlsx")

print(dataset.shape)

dataset.head()

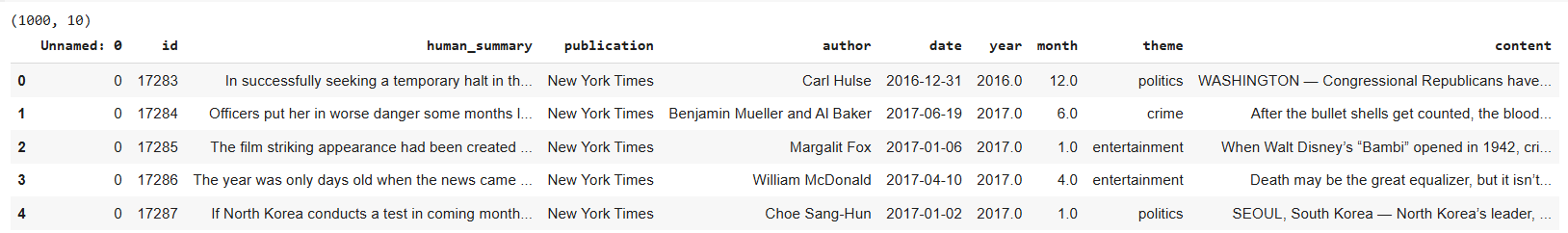

Output:

The dataset contains news articles and human summaries in the content and human_summary columns.

Next, we will define a helper function that calculates ROUGE scores given two text documents. ROUGE scores are a commonly used metric for machine translation and text summarization tasks.

# Function to calculate ROUGE scores
def calculate_rouge(reference, candidate):
    scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)
    scores = scorer.score(reference, candidate)
    # Keep only the F-measure for each ROUGE variant
    return {key: value.fmeasure for key, value in scores.items()}
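To make the metric concrete, here is a minimal sketch of what the ROUGE-1 F-measure captures: unigram overlap between a reference and a candidate text. It assumes plain lowercase whitespace tokenization with no stemming, so its values will not exactly match those from rouge_scorer. The function name rouge1_f is hypothetical and used only for illustration.

```python
from collections import Counter

def rouge1_f(reference, candidate):
    """Simplified ROUGE-1 F-measure (assumption: whitespace tokens, no stemming)."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Clipped overlap: a word counts at most as often as it appears in both texts
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)

score = rouge1_f("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 4))  # 0.8333
```

Five of the six words match in both directions, so precision and recall are both 5/6, and so is the F-measure.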

We will define the summarize_articles_with_model() function, which takes the OpenAI model ID and generates summaries for the first 20 articles in the dataset. The function also calculates the ROUGE score for each summary and returns a list of ROUGE scores for the first 20 articles.

You can summarize more records, but that can cost more.

def summarize_articles_with_model(model_id):
    results = []

    # Summarize only the first 20 articles to limit API cost
    for i, (_, row) in enumerate(dataset[:20].iterrows(), start=1):
        article = row['content']
        human_summary = row['human_summary']

        print(f"Summarizing article {i}.")

        prompt = f"Summarize the following article in 1150 characters. The summary should look like human created:\n\n{article}\n\nSummary:"

        response = client.chat.completions.create(
            model=model_id,
            messages=[{"role": "user", "content": prompt}],
            max_tokens=1150,
            temperature=0
        )

        generated_summary = response.choices[0].message.content

        # Compare the generated summary with the human-written reference
        rouge_scores = calculate_rouge(human_summary, generated_summary)

        results.append({
            'article_id': row['id'],
            'generated_summary': generated_summary,
            'rouge1': rouge_scores['rouge1'],
            'rouge2': rouge_scores['rouge2'],
            'rougeL': rouge_scores['rougeL']
        })

    return results

Summarization Results with GPT-4o November Update

Let's first summarize the articles using the GPT-4o November update. The ID for this model is gpt-4o-2024-11-20. We pass the model ID to the summarize_articles_with_model() function, which returns a list of ROUGE scores for the first 20 articles. Next, we convert the list into a Pandas DataFrame and print the mean ROUGE scores.

results = summarize_articles_with_model("gpt-4o-2024-11-20")

results_df = pd.DataFrame(results)

mean_values = results_df[["rouge1", "rouge2", "rougeL"]].mean()

print(mean_values)

Output:

Summarizing article 20.

rouge1 0.341660

rouge2 0.069531

rougeL 0.150887

The above output shows the mean ROUGE scores. Higher ROUGE scores indicate greater overlap with the human-written summaries, and hence better summarization performance.

Summarization Results with GPT-4o August Update

Next, we will summarize the articles using the GPT-4o August update.

results = summarize_articles_with_model("gpt-4o-2024-08-06")

results_df = pd.DataFrame(results)

mean_values = results_df[["rouge1", "rouge2", "rougeL"]].mean()

print(mean_values)

Output:

rouge1 0.363209

rouge2 0.086697

rougeL 0.170481

The above output shows that the GPT-4o August update performs better than the November update for all ROUGE scores. The older model wins here.
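The size of the gap can be put in numbers with a few lines of Python. This is only a small sketch that reuses the mean scores printed above; the variable names are my own.

```python
# Mean ROUGE F-measures copied from the two outputs above
november = {"rouge1": 0.341660, "rouge2": 0.069531, "rougeL": 0.150887}
august = {"rouge1": 0.363209, "rouge2": 0.086697, "rougeL": 0.170481}

# Absolute gap (August minus November) for each metric
gaps = {metric: round(august[metric] - november[metric], 6) for metric in november}

for metric, gap in gaps.items():
    print(f"{metric}: August leads by {gap:.6f}")
```

All three gaps are roughly 0.02, so the August update's lead is consistent but modest on this 20-article sample.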

Next, we will compare GPT-4o November and August updates for text classification.

Comparison of Multi-Class Zero-Shot Text Classification

First, we will do multi-class classification using the US Airline Sentiment Dataset.

## Dataset download link

## https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment?select=Tweets.csv

dataset = pd.read_csv(r"/content/Tweets.csv")

print(dataset.shape)

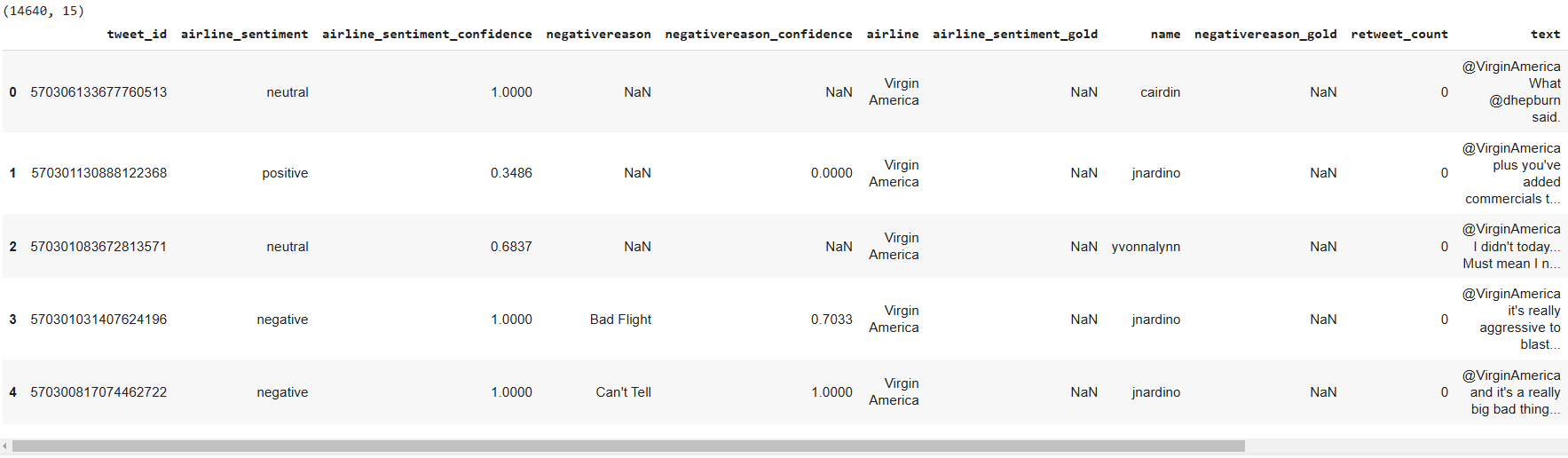

dataset.head()Output:

We will preprocess the dataset and create a new one containing 100 records: 34 neutral tweets, 33 positive tweets, and 33 negative tweets.

# Remove rows where 'airline_sentiment' or 'text' are NaN

dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

# Remove rows where 'airline_sentiment' or 'text' are empty strings

dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

# Filter the DataFrame for each sentiment

neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']

positive_df = dataset[dataset['airline_sentiment'] == 'positive']

negative_df = dataset[dataset['airline_sentiment'] == 'negative']

# Randomly sample records from each sentiment

neutral_sample = neutral_df.sample(n=34)

positive_sample = positive_df.sample(n=33)

negative_sample = negative_df.sample(n=33)

# Concatenate the samples into one DataFrame

dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

# Reset index if needed

dataset.reset_index(drop=True, inplace=True)

# print value counts

print(dataset["airline_sentiment"].value_counts())

Output:

airline_sentiment

neutral 34

positive 33

negative 33

Name: count, dtype: int64

Next, we define the find_sentiment() function, which takes the OpenAI client and the model ID as parameters and prints the model's accuracy for tweet sentiment classification.

def find_sentiment(client, model):
    tweets_list = dataset["text"].tolist()
    all_sentiments = []
    i = 0
    exceptions = 0

    while i < len(tweets_list):
        try:
            tweet = tweets_list[i]
            content = """What is the sentiment expressed in the following tweet about an airline?
Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
tweet: {}""".format(tweet)

            sentiment_value = client.chat.completions.create(
                model=model,
                temperature=0,
                max_tokens=10,
                messages=[
                    {"role": "user", "content": content}
                ]
            ).choices[0].message.content

            all_sentiments.append(sentiment_value)
            i = i + 1
            print(i, sentiment_value)

        except Exception as e:
            # i is not advanced on failure, so the same tweet is retried
            print("===================")
            print("Exception occurred:", e)
            exceptions += 1

    print("Total exception count:", exceptions)

    # accuracy_score expects (y_true, y_pred)
    accuracy = accuracy_score(dataset["airline_sentiment"], all_sentiments)
    print("Accuracy:", accuracy)
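The function above compares the raw model reply with the label, so a reply such as "Positive." would count as a misclassification. As a minimal sketch, assuming you want to guard against stray whitespace, capitalization, or trailing punctuation, you could normalize each reply before scoring. The helper normalize_sentiment() is hypothetical and not part of the OpenAI library, and the accuracy figures in this article were computed without it.

```python
VALID_SENTIMENTS = {"positive", "negative", "neutral"}

def normalize_sentiment(raw):
    """Map a raw model reply to one of the three labels, or 'unknown' (hypothetical helper)."""
    # Trim whitespace, lowercase, then drop surrounding punctuation and quotes
    cleaned = raw.strip().lower().strip(".!\"'")
    return cleaned if cleaned in VALID_SENTIMENTS else "unknown"

print(normalize_sentiment(" Positive.\n"))     # positive
print(normalize_sentiment("negative"))         # negative
print(normalize_sentiment("It sounds happy"))  # unknown
```

Replies that still do not match a valid label are mapped to "unknown", which keeps them visible as errors instead of silently dropping them.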

Multi-Class Text Classification Results with GPT-4o November Update

Let's first see model accuracy for the GPT-4o November update.

model = "gpt-4o-2024-11-20"

find_sentiment(client, model)

Output:

Total exception count: 0

Accuracy: 0.77

The output shows that the GPT-4o November update achieves an accuracy of 77%.

Multi-Class Text Classification Results with GPT-4o August Update

The following script returns the accuracy of the GPT-4o August version for tweet sentiment classification.

model = "gpt-4o-2024-08-06"

find_sentiment(client, model)

Output:

Total exception count: 0

Accuracy: 0.73

The above output shows that the GPT-4o August update returns an accuracy of 73%, four percentage points lower than the November update. So, the latest update wins here.
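With only 100 tweets, it helps to gauge how much an accuracy figure can move by chance. The following is a rough sketch, assuming each tweet is an independent trial so that the binomial standard error applies; the function name is my own.

```python
import math

def accuracy_standard_error(accuracy, n):
    """Binomial standard error of an accuracy estimate (assumes independent samples)."""
    return math.sqrt(accuracy * (1 - accuracy) / n)

n_tweets = 100
for name, acc in [("November", 0.77), ("August", 0.73)]:
    se = accuracy_standard_error(acc, n_tweets)
    print(f"{name}: accuracy={acc:.2f}, standard error={se:.3f}")
```

Both standard errors come out at roughly 0.04, about the same size as the gap between the two models, so a larger sample would be needed to confirm the difference.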

The following section will compare the GPT-4o November and August updates for multi-label text classification.

Comparison for Multi-label Zero-Shot Text Classification

We will use the Research Papers Dataset from Kaggle for multi-label text classification.

The following script imports the dataset into your application and displays its header.

## dataset download link

## https://www.kaggle.com/datasets/shivanandmn/multilabel-classification-dataset?select=train.csv

dataset = pd.read_csv(r"/content/train.csv")

print(f"Dataset Shape: {dataset.shape}")

dataset.head()

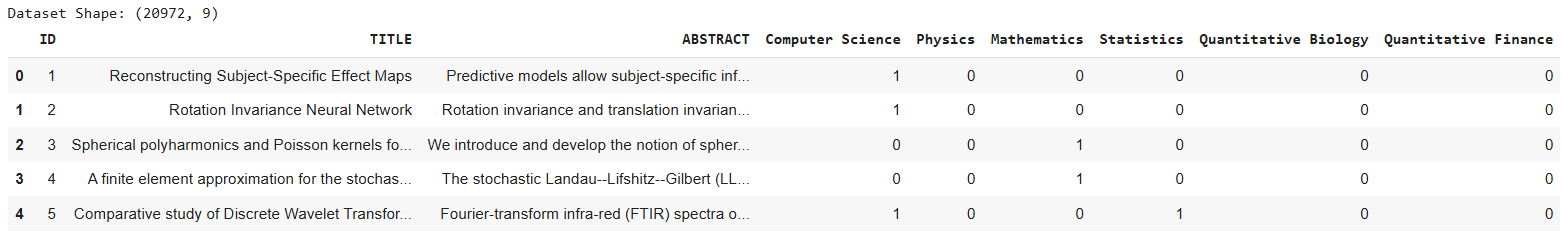

Output:

The dataset contains research paper titles and abstracts and the corresponding subject categories to which they may belong. A research paper can belong to multiple categories.

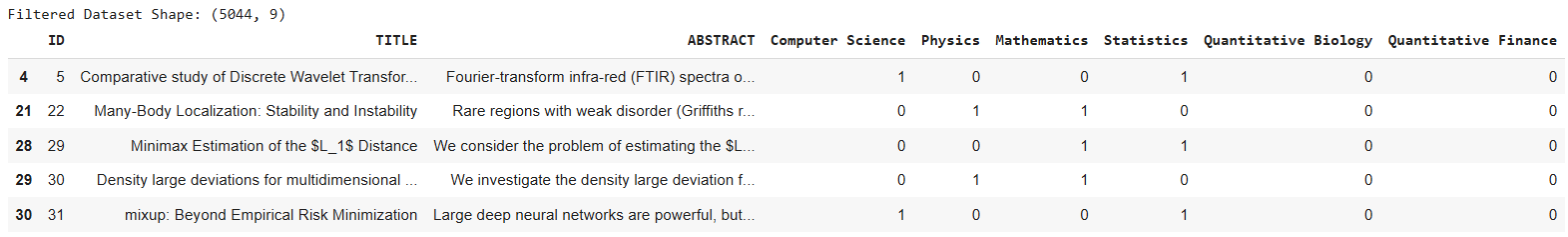

We will filter the dataset and retrieve records where a research paper belongs to at least two subject categories.

subjects = ["Computer Science", "Physics", "Mathematics", "Statistics", "Quantitative Biology", "Quantitative Finance"]

filtered_dataset = dataset[(dataset[subjects] == 1).sum(axis=1) >= 2]

print(f"Filtered Dataset Shape: {filtered_dataset.shape}")

filtered_dataset.head()

Output:

Subsequently, we will define the find_research_category() function, which accepts the OpenAI client, model ID, and dataset and predicts the research paper categories.

def find_research_category(client, model, dataset):
    outputs = []
    i = 0

    for _, row in dataset.iterrows():
        title = row['TITLE']
        abstract = row['ABSTRACT']

        content = """You are an expert in various scientific domains.
Given the following research paper title and abstract, classify the research paper into at least two or more of the following categories:
- Computer Science
- Physics
- Mathematics
- Statistics
- Quantitative Biology
- Quantitative Finance
Return only a comma-separated list of the categories (e.g., [Computer Science,Physics] or [Computer Science,Physics,Mathematics]).
Use the exact case sensitivity and spelling of the categories provided above.
text: Title: {}\nAbstract: {}""".format(title, abstract)

        research_category = client.chat.completions.create(
            model=model,
            temperature=0,
            max_tokens=100,
            messages=[
                {"role": "user", "content": content}
            ]
        ).choices[0].message.content

        outputs.append(research_category)
        print(i + 1, research_category)
        i += 1

    return outputs

The find_research_category() output is a list of subject categories in string format. We will convert them into binary values to compare them with the target subject categories. To do so, we define the parse_outputs_to_dataframe() function.

def parse_outputs_to_dataframe(outputs):
    subjects = ["Computer Science", "Physics", "Mathematics", "Statistics", "Quantitative Biology", "Quantitative Finance"]

    # Remove square brackets, split on commas, and trim whitespace around each subject
    # (without trimming, a reply like "[Computer Science, Physics]" would miss "Physics")
    parsed_data = [[s.strip() for s in item.strip().strip('[]').split(',')] for item in outputs]

    # Create a DataFrame with one column per subject, initialized with 0s
    df = pd.DataFrame(0, index=range(len(parsed_data)), columns=subjects)

    # Populate the DataFrame with 1s based on the presence of each subject in each row
    for i, subjects_list in enumerate(parsed_data):
        for subject in subjects_list:
            if subject in subjects:
                df.loc[i, subject] = 1

    return df
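The same conversion can be illustrated on a single model reply without pandas. This is a minimal sketch with a hypothetical helper, to_binary_row(), which also trims spaces around each category name in case the model adds them after the commas.

```python
SUBJECTS = ["Computer Science", "Physics", "Mathematics",
            "Statistics", "Quantitative Biology", "Quantitative Finance"]

def to_binary_row(raw_output):
    """Turn a reply such as '[Computer Science,Physics]' into a 0/1 list (hypothetical helper)."""
    names = {name.strip() for name in raw_output.strip().strip("[]").split(",")}
    return [1 if subject in names else 0 for subject in SUBJECTS]

print(to_binary_row("[Computer Science,Physics]"))  # [1, 1, 0, 0, 0, 0]
print(to_binary_row("[Statistics, Mathematics]"))   # [0, 0, 1, 1, 0, 0]
```

Each position in the list corresponds to one subject, in the same order as the subject columns of the dataset.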

We will randomly select 100 records from the dataset, as shown in the following script. You can select more records if you want.

sampled_df = filtered_dataset.sample(n=100, random_state=42)

Multi-Label Text Classification Results with GPT-4o November Update

Let's first do multi-label classification using the GPT-4o November update.

model = "gpt-4o-2024-11-20"

outputs = find_research_category(client,

model,

sampled_df)

predictions = parse_outputs_to_dataframe(outputs)

targets = sampled_df[subjects]

# Calculate Hamming Loss

hamming = hamming_loss(targets, predictions)

print(f"Hamming Loss: {hamming}")

# Calculate Subset Accuracy (Exact Match Ratio)

subset_accuracy = accuracy_score(targets, predictions)

print(f"Subset Accuracy: {subset_accuracy}")

Output:

Hamming Loss: 0.175

Subset Accuracy: 0.35

The output shows a Hamming loss of 0.175 and a subset accuracy of 35%. A lower value of Hamming loss indicates better model performance.
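The two metrics measure different things: Hamming loss is the fraction of individual labels predicted incorrectly, while subset accuracy only counts a paper as correct when its entire label set matches. The following sketch computes both by hand on made-up toy data; the function names are my own and stand in for scikit-learn's hamming_loss() and accuracy_score().

```python
def hamming_loss_manual(targets, predictions):
    """Fraction of individual labels that are predicted incorrectly."""
    total = sum(len(row) for row in targets)
    wrong = sum(t != p
                for t_row, p_row in zip(targets, predictions)
                for t, p in zip(t_row, p_row))
    return wrong / total

def subset_accuracy_manual(targets, predictions):
    """Fraction of rows whose full label set is predicted exactly."""
    exact = sum(t_row == p_row for t_row, p_row in zip(targets, predictions))
    return exact / len(targets)

# Toy data: two papers, three possible subjects (made up for illustration)
targets = [[1, 1, 0], [0, 1, 1]]
predictions = [[1, 0, 0], [0, 1, 1]]

print(round(hamming_loss_manual(targets, predictions), 4))  # 0.1667
print(subset_accuracy_manual(targets, predictions))         # 0.5
```

One wrong label out of six gives a Hamming loss of about 0.17, yet that single mistake makes the whole first paper count as wrong for subset accuracy. This is why subset accuracy is usually the stricter of the two numbers.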

Multi-Label Text Classification Results with GPT-4o August Update

The following script shows the results of multi-label text classification using the GPT-4o August update.

model = "gpt-4o-2024-08-06"

outputs = find_research_category(client,

model,

sampled_df)

predictions = parse_outputs_to_dataframe(outputs)

targets = sampled_df[subjects]

# Calculate Hamming Loss

hamming = hamming_loss(targets, predictions)

print(f"Hamming Loss: {hamming}")

# Calculate Subset Accuracy (Exact Match Ratio)

subset_accuracy = accuracy_score(targets, predictions)

print(f"Subset Accuracy: {subset_accuracy}")

Output:

Hamming Loss: 0.165

Subset Accuracy: 0.37

The results show that the GPT-4o August update again outperforms the November update on both Hamming loss and subset accuracy.

Conclusion

The results presented in this article show that the GPT-4o November update performs roughly on par with the GPT-4o August update. For text summarization, the August update scored slightly higher on all three ROUGE metrics. For text classification, the November update performs better for multi-class classification but slightly worse for multi-label classification. Hence, we cannot conclude that the November update is better for text classification either.

I suggest you update the prompts and see if you can get significantly better results with the GPT-4o November update.