DeepSeek-R1 is a groundbreaking family of reinforcement learning (RL)-driven AI models developed by the Chinese AI firm DeepSeek. It is designed to rival industry leaders like OpenAI and Google in complex decision-making and optimization problems.

In this article, we will benchmark the DeepSeek R1 model for text classification and summarization as we did with Qwen and LLama models in a previous article.

So, let's begin without further ado.

Importing and Installing Required Libraries

We will use a distilled version of DeepSeek R1 model from the Hugging Face Inference API. You can use larger versions of DeepSeek models via the DeepSeek API.

The following script installs the required libraries.

!pip install huggingface_hub==0.24.7

!pip install rouge-score

!pip install --upgrade openpyxl

!pip install pandas openpyxl

The script below imports the required libraries into your Python application.

from huggingface_hub import InferenceClient

import os

import pandas as pd

from rouge_score import rouge_scorer

from sklearn.metrics import accuracy_score

from collections import defaultdictCalling DeepSeek R1 Model Using Hugging Face Inference API

Calling the DeepSeek R1 model via the Hugging Face Inference API is similar to calling any text generation model. You need to create an object of the InferenceClient class and pass it the model ID.

hf_token = os.environ.get('HF_TOKEN')

#deepseek-R1-distill endpoint

#https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B

deepseek_model_client = InferenceClient(

"deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",

token=hf_token

)Let's test the DeepSeek model we just imported. The following script defines the make_prediction() function, which accepts the model object, the system role, and the user query and returns the model response.

def make_prediction(model, system_role, user_query):

response = model.chat_completion(

messages=[{"role": "system", "content": system_role},

{"role": "user", "content": user_query}],

max_tokens=1000,

)

return response.choices[0].message.contentWe will ask the DeepSeek model to identify the sentiment expressed in a tweet.

system_role = "Assign positive, negative, or neutral sentiment to the movie review. Return only a single word in your response"

user_query = "I like this movie a lot"

output = make_prediction(deepseek_model_client,

system_role,

user_query)

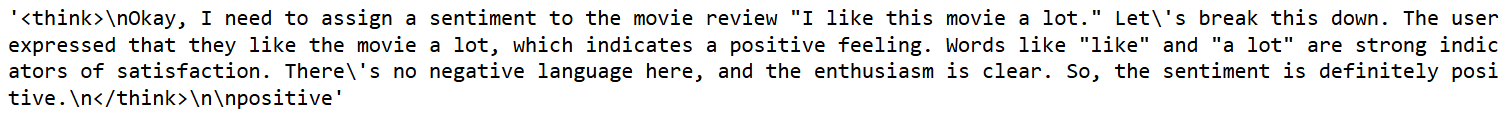

outputOutput:

The above output shows the thought process of the DeepSeek model adopted to answer the query. With the simpler models, you will only get the answer.

Since we are only interested in the sentiment, we can extract it using the following code.

last_word = output.strip().split("\n")[-1].strip()

print(last_word)Output:

positiveIn the next section, we will use the DeepSeek model for the sentiment classification of tweets in a dataset. We will compare the results with those obtained via the Qwen and Llama models in the previous article.

DeepSeek For Text Classification

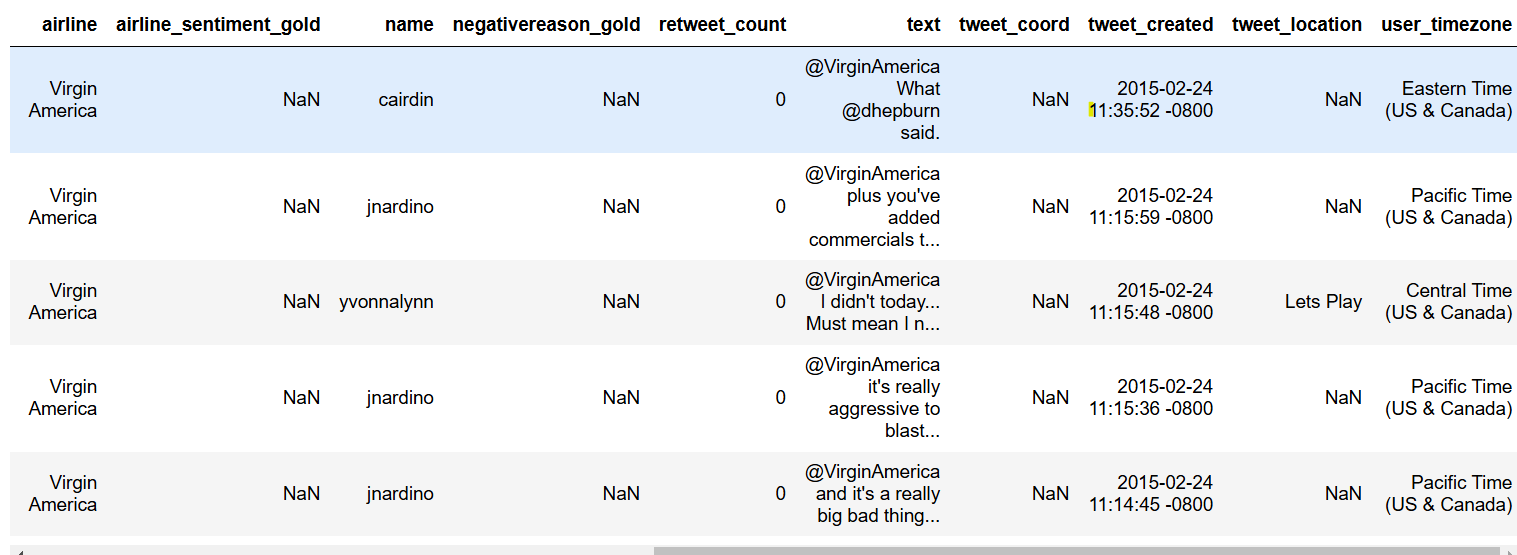

We will detect tweets' sentiment from the Twitter US Airline Sentiment Dataset. The following script imports the dataset and displays its header.

## Dataset download link

## https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment?select=Tweets.csv

dataset = pd.read_csv(r"D:\Datasets\Tweets.csv")

dataset.head()Output:

Next, we will fetch 100 tweets, 34 of which have neutral sentiments and the remaining 66 evenly split between positive and negative sentiments, with 33 tweets each. You can use more tweets to test the DeepSeek model if you want.

# Remove rows where 'airline_sentiment' or 'text' are NaN

dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

# Remove rows where 'airline_sentiment' or 'text' are empty strings

dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

# Filter the DataFrame for each sentiment

neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']

positive_df = dataset[dataset['airline_sentiment'] == 'positive']

negative_df = dataset[dataset['airline_sentiment'] == 'negative']

# Randomly sample records from each sentiment

neutral_sample = neutral_df.sample(n=34)

positive_sample = positive_df.sample(n=33)

negative_sample = negative_df.sample(n=33)

# Concatenate the samples into one DataFrame

dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

# Reset index if needed

dataset.reset_index(drop=True, inplace=True)

# print value counts

print(dataset["airline_sentiment"].value_counts())Output:

airline_sentiment

neutral 34

positive 33

negative 33

Name: count, dtype: int64We will define the predict_sentiment() function that predicts the sentiment of a single tweet.

def predict_sentiment(model, system_role, user_query):

response = model.chat_completion(

messages=[{"role": "system", "content": system_role},

{"role": "user", "content": user_query}],

max_tokens=1000,

)

output = response.choices[0].message.content

last_word = output.strip().split("\n")[-1].strip()

return last_wordNext, we will iterate through the 100 tweets in our dataset and predict their sentiment using the DeepSeek model.

tweets_list = dataset["text"].tolist()

all_sentiments = []

exceptions = 0

for i, tweet in enumerate(tweets_list, 1):

try:

print(f"Processing tweet {i}")

system_role = "You are an expert in annotating tweets with positive, negative, and neutral emotions"

user_query = (

f"What is the sentiment expressed in the following tweet about an airline? "

f"Select sentiment value from positive, negative, or neutral. "

f"Return only the sentiment value in small letters.\n\n"

f"tweet: {tweet}"

)

sentiment_value = predict_sentiment(deepseek_model_client,

system_role,

user_query)

all_sentiments.append({

'tweet_id': i,

'sentiment': sentiment_value

})

print(i, sentiment_value)

except Exception as e:

print("===================")

print("Exception occurred with Tweet:", i, "| Error:", e)

exceptions += 1

print("Total exception count:", exceptions)

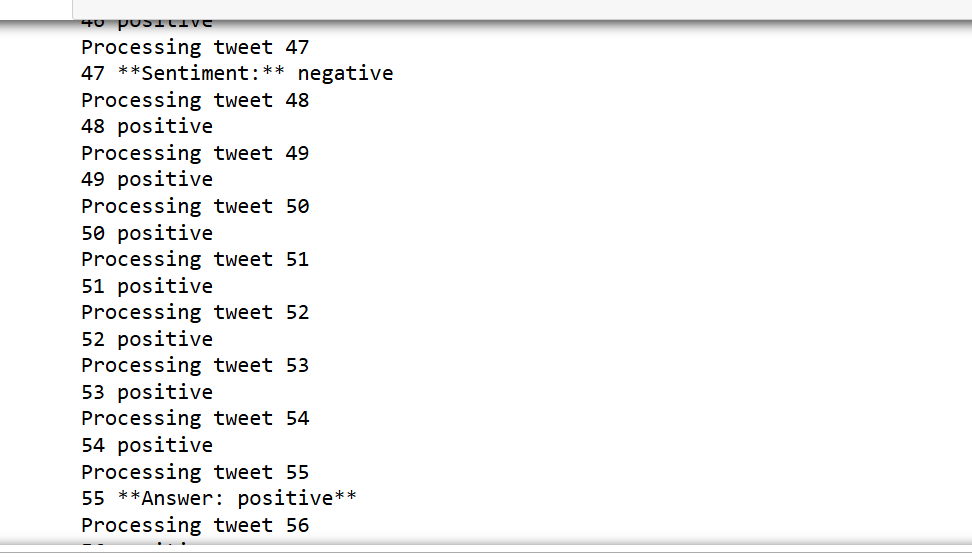

Output:

In some cases, the output contains additional text, such as Sentiment: negative. We will extract the sentiment text from the output and compare it with the target labels to calculate accuracy.

keywords = {"positive", "negative", "neutral"}

result = []

for string in all_sentiments:

for word in keywords:

if word in string["sentiment"].lower():

result.append({'sentiment':word})

results_df = pd.DataFrame(result)

accuracy = accuracy_score(results_df['sentiment'], dataset["airline_sentiment"].iloc[:len(results_df)])

print(f"Overall Accuracy: {accuracy}")

Output:

Overall Accuracy: 0.87The output shows that we obtained an accuracy of 87% with the DeepSeek model, which is an 8% improvement over the accuracy obtained via the Qwen and Llama models in the previous article.

Next, we will try the DeepSeek model for text summarization.

DeepSeek R1 for Text Summarization

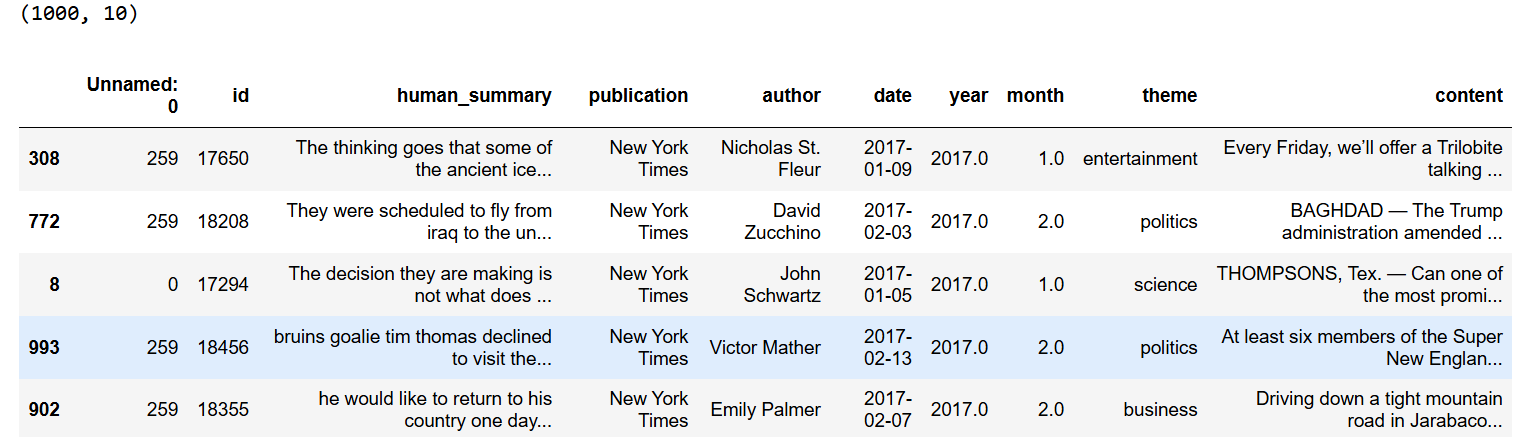

We will summarize articles in their News Article Dataset. The following script imports the dataset.

# Kaggle dataset download link

# https://github.com/reddzzz/DataScience_FP/blob/main/dataset.xlsx

dataset = pd.read_excel(r"D:\Datasets\dataset.xlsx")

dataset = dataset.sample(frac=1)

print(dataset.shape)

dataset.head()Output:

Next, we will define the generate_summary() function, which generates a summary of the news articles.

def generate_summary(model, system_role, user_query):

response = model.chat_completion(

messages=[{"role": "system", "content": system_role},

{"role": "user", "content": user_query}],

max_tokens=1500,

)

output = response.choices[0].message.content

summary = output.strip().split("\n")[-1].strip()

return summaryWe will use the ROUGE score metric to evaluate the performance of our DeepSeek model. The following script defines the calculate_rouge() function to calculate ROUGE scores for each article summary.

# Function to calculate ROUGE scores

def calculate_rouge(reference, candidate):

scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)

scores = scorer.score(reference, candidate)

return {key: value.fmeasure for key, value in scores.items()}Finally, we will iterate through the articles (first 20 only) in our dataset, generate the article summaries, and calculate their ROUGE scores. The output shows the average ROUGE scores for all the articles.

results = []

i = 0

for _, row in dataset[:20].iterrows():

article = row['content']

human_summary = row['human_summary']

i = i + 1

print(f"Summarizing article {i}.")

system_role = "You are an expert in creating summaries from text"

user_query = f"""Summarize the following article in 1150 characters. Do not return your thought process. Only the summary.

Your summary will be evaluated using ROUGE score. The summary should look like human created:\n\n{article}\n\nSummary:"""

generated_summary = generate_summary(deepseek_model_client,

system_role,

user_query)

rouge_scores = calculate_rouge(human_summary, generated_summary)

results.append({

'article_id': row.id,

'generated_summary': generated_summary,

'rouge1': rouge_scores['rouge1'],

'rouge2': rouge_scores['rouge2'],

'rougeL': rouge_scores['rougeL']

})

# Create a DataFrame with results

results_df = pd.DataFrame(results)

mean_values = results_df[['rouge1', 'rouge2', 'rougeL']].mean()

print(mean_values)

Output:

rouge1 0.368321

rouge2 0.102507

rougeL 0.183425

dtype: float64The output shows that the ROUGE scores obtained via the DeepSeek model are slightly lower than the Qwen model, which shows that DeepSeek's thinking abilities are still limited for longer text inputs. Try DeepSeek's larger models to see if you get better results for text summarization.