In this article, you will learn to use Google Gemini Pro, a state-of-the-art multimodal generative model, to extract information from PDF files and convert it to CSV files. You will use a simple text prompt to tell Google Gemini Pro which information to extract. This is a valuable skill for data analysis, reporting, and automation.

You will use Python to call the Google Vertex AI API and extract information from PDF pages that have been converted to JPEG images.

So, let's begin without further ado.

Importing and Installing Required Libraries

I ran my code on Google Colab, where I only needed to install the Google Cloud AI Platform library. You can install it with the following command.

pip install --upgrade google-cloud-aiplatform

Note: You must create a Google Cloud account with Vertex AI enabled and download a service account key before running the scripts in this tutorial. When you sign up for Google Cloud Platform, you will get free credits worth $300.

The following script imports the required libraries into our application.

import base64
import glob
import csv
import os
import re

from vertexai.preview.generative_models import GenerativeModel, Part

Defining Helper Functions for Image Reading

Before using Google Gemini Pro to extract information from PDF tables, you must convert your PDF files to an image format such as JPG or PNG. The Gemini Pro vision model used in this tutorial accepts images as input, not PDF files. You can use any tool that converts PDF files to JPG images, such as PDFtoJPG.
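If you would rather do the conversion in Python, the following is a minimal sketch. It assumes the third-party pdf2image package, which in turn needs the Poppler utilities installed; neither is used elsewhere in this tutorial. The function names pdf_to_jpgs() and page_image_path() and the dpi value are illustrative choices.

```python
from pathlib import Path


def page_image_path(pdf_path, page_number, out_dir):
    """Build the output path for one page, e.g. out/receipt_page1.jpg."""
    stem = Path(pdf_path).stem
    return Path(out_dir) / f"{stem}_page{page_number}.jpg"


def pdf_to_jpgs(pdf_path, out_dir, dpi=200):
    """Render each PDF page to a JPEG file and return the saved paths."""
    # Assumption: pdf2image and Poppler are installed (pip install pdf2image)
    from pdf2image import convert_from_path

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    saved = []
    for number, page in enumerate(convert_from_path(pdf_path, dpi=dpi), start=1):
        target = page_image_path(pdf_path, number, out_dir)
        page.save(target, "JPEG")  # each rendered page is a PIL image
        saved.append(str(target))
    return saved
```

Calling pdf_to_jpgs() on a receipt PDF should leave one .jpg file per page in the output directory, ready for the helper functions defined in this section.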

Once you have converted your PDF files to JPG images, you need to read them as bytes and hand them to the SDK together with their MIME type, which is image/jpeg since we will process JPEG images. In this tutorial, we encode the bytes as base64 strings before passing them on. (The SDK documents Part.from_data() as taking bytes, so passing the raw bytes directly should also work.)

To simplify these tasks, you can define two helper functions: get_jpg_file_paths() and read_image().

The get_jpg_file_paths() function takes a directory as an argument and returns a list of absolute paths to all the JPG files in that directory and its subdirectories.

The read_image() function takes a list of image paths as an argument and returns a list of Part objects. Part is a helper class provided by the vertexai.preview.generative_models module. Each Part object contains the base64-encoded string and the MIME type of an image.

def get_jpg_file_paths(directory):
    # Recursively collect every .jpg file under the directory
    jpg_file_paths = glob.glob(os.path.join(directory, '**', '*.jpg'), recursive=True)
    return [os.path.abspath(path) for path in jpg_file_paths]

def read_image(img_paths):
    imgs_b64 = []
    for img in img_paths:
        with open(img, "rb") as f: # open the image file in binary mode
            img_data = f.read() # read the image data as bytes
            img_b64 = base64.b64encode(img_data) # encode the bytes as base64
            img_b64 = img_b64.decode() # convert the base64 bytes to a string
            img_b64 = Part.from_data(data=img_b64, mime_type="image/jpeg")
            imgs_b64.append(img_b64)
    return imgs_b64
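The helper above only matches files ending in .jpg, and read_image() hard-codes image/jpeg. As a hedged variation, the sketch below also picks up .jpeg and .png files and derives the MIME type from the file name with the standard mimetypes module. The names IMAGE_EXTENSIONS, find_image_paths(), and load_image_b64() are hypothetical and not part of the original code.

```python
import base64
import mimetypes
import os

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # hypothetical choice of formats


def find_image_paths(directory):
    """Return sorted absolute paths of image files under directory."""
    found = []
    for root, _dirs, files in os.walk(directory):
        for name in files:
            # Compare extensions case-insensitively so .JPG is matched too
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
                found.append(os.path.abspath(os.path.join(root, name)))
    return sorted(found)


def load_image_b64(path):
    """Return (base64 string, MIME type) for one image file."""
    mime_type, _encoding = mimetypes.guess_type(path)
    with open(path, "rb") as f:
        data_b64 = base64.b64encode(f.read()).decode()
    return data_b64, mime_type
```

Each pair returned by load_image_b64() can then be passed to Part.from_data(data=..., mime_type=...) in the same way read_image() does.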

Extracting Information from PDF Using Google Gemini Pro

Now that you know how to convert your PDF files to JPG images and encode them as base64 strings, you can use Google Gemini Pro to extract information from them.

To use Google Gemini Pro, you must create a GenerativeModel object and pass it the name of the model you want to use.

In this tutorial, you will use Google's latest generative model, gemini-pro-vision, a multimodal LLM capable of processing images and text.

You will also use a specific generation config, which is a set of parameters that control the behavior of the generative model.

But before the above steps, you will need to set the GOOGLE_APPLICATION_CREDENTIALS environment variable, which stores the path to the JSON key file of your Vertex AI service account.

The following script sets the environment variable, creates the model object, and defines the configuration settings.

os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = r"PATH_TO_VERTEX_AI_SERVICE_ACCOUNT JSON FILE"

model = GenerativeModel("gemini-pro-vision")

config = {
    "max_output_tokens": 2048,
    "temperature": 0,
    "top_p": 1,
    "top_k": 32
}
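A wrong path in GOOGLE_APPLICATION_CREDENTIALS usually only surfaces when the first API call fails. As a small sketch, the hypothetical helper below (set_credentials() is not part of any Google library) checks that the file exists and looks like a service account key before setting the variable. It assumes the key file is the standard JSON download, which carries a "type": "service_account" field.

```python
import json
import os


def set_credentials(key_path):
    """Validate a service account key file, then export its path."""
    if not os.path.isfile(key_path):
        raise FileNotFoundError(f"Key file not found: {key_path}")
    with open(key_path, encoding="utf-8") as f:
        key = json.load(f)
    # Assumption: standard service account keys declare this type
    if key.get("type") != "service_account":
        raise ValueError("Not a service account key file")
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = os.path.abspath(key_path)
    return os.environ["GOOGLE_APPLICATION_CREDENTIALS"]
```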

Finally, to generate a response from the Google Gemini Pro model, you need to call the generate_content() method of the GenerativeModel object. We will pass three arguments to this method:

contents: The model input, passed as the first positional argument. Here it is a list of Part objects that contain the data and the MIME type of each image, followed by the text prompt. You can provide both text and image inputs in this list.

generation_config: A dictionary containing the generation parameters you set earlier.

stream: A boolean value that indicates whether you want to receive the response as a stream of chunks or as a single object.

You can use the following code to define the generate() function that generates a response from Google Gemini Pro, given an image or a list of images, and a text prompt:

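def generate(img, prompt):
    # The model input: the image parts followed by the text prompt
    contents = img + [prompt]
    responses = model.generate_content(
        contents,
        generation_config=config,
        stream=True,
    )
    # With stream=True the response arrives in chunks, so join their text
    full_response = ""
    for response in responses:
        full_response += response.text
    return full_response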
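The loop in generate() can be tried without calling the API by feeding it stand-in chunk objects. The sketch below does that and also skips chunks whose text is unavailable. It assumes that reading .text on such a chunk raises ValueError, which is my understanding of the SDK's behavior rather than something shown in this tutorial. FakeChunk and collect_text() are made-up names for illustration.

```python
class FakeChunk:
    """Stand-in for one streamed response chunk (illustration only)."""

    def __init__(self, text=None):
        self._text = text

    @property
    def text(self):
        # Assumption: a chunk without text raises ValueError on access
        if self._text is None:
            raise ValueError("chunk has no text")
        return self._text


def collect_text(chunks):
    """Concatenate the text of all chunks, skipping those without text."""
    pieces = []
    for chunk in chunks:
        try:
            pieces.append(chunk.text)
        except ValueError:
            continue  # nothing to add for this chunk
    return "".join(pieces)


stream = [FakeChunk("Date,Total\n"), FakeChunk(), FakeChunk("16/01/2024,17.35")]
print(collect_text(stream))
```

Collecting the pieces in a list and joining them once is a common alternative to repeated string concatenation; for a handful of chunks either approach works.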

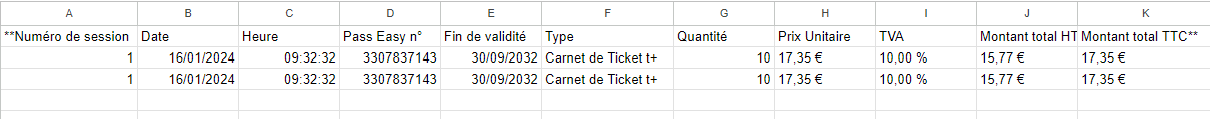

As an example, we will convert the contents of this receipt into a CSV file. The receipt is in French and contains information about the date of purchase, number of tickets, tax information, etc. The receipt is not in tabular format, yet you will see that we can still convert its information to a CSV file.

For demonstration purposes, I will use two copies of the same receipt to show you how you can extract information from multiple images.

The following script calls the get_jpg_file_paths() and read_image() functions that we defined earlier to read all the images in my input directory and convert them into Part objects that the Google Gemini Pro model expects.

# In a raw string, a single backslash is enough as the path separator
directory_path = r'D:\Receipts'
image_paths = get_jpg_file_paths(directory_path)
imgs_b64 = read_image(image_paths)

Next, we define our text prompt to extract information from the receipt image. Your prompt engineering skills will shine here. A good prompt can make the task much easier for the LLM. We will use the following prompt to extract information.

prompt = """I have the above receipts. Return a response that contains information from the receipts in a comma-separated file format where row fields are table columns,
whereas row values are column values. The output should contain (header + number of receipt rows).
The first row should contain all column headers, and the remaining rows should contain all column values from two receipts one in each row.
Must use all field values in the receipt. """
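The prompt above hard-codes the number of receipts. If the number of images varies, one option is to build the prompt from the image count. The build_prompt() helper below is a hypothetical sketch that reuses the wording of the original prompt; whether the model responds equally well to this variant is something you would need to check on your own receipts.

```python
def build_prompt(num_receipts):
    """Return the extraction prompt for the given number of receipt images."""
    if num_receipts < 1:
        raise ValueError("num_receipts must be at least 1")
    return (
        "I have the above receipts. Return a response that contains information "
        "from the receipts in a comma-separated file format where row fields are "
        "table columns, whereas row values are column values. "
        f"The output should contain {num_receipts + 1} rows "
        f"(1 header + {num_receipts} receipt rows). "
        "The first row should contain all column headers, and the remaining rows "
        f"should contain all column values from the {num_receipts} receipts, "
        "one in each row. Must use all field values in the receipt."
    )


print(build_prompt(2))
```

With the variables from this tutorial, the call would look like build_prompt(len(imgs_b64)).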

Finally, we will pass the input images and the text prompt to the generate() function that returns the model response.

full_response = generate(imgs_b64, prompt)

print(full_response)

Output:

**Numéro de session,Date,Heure,Pass Easy n°,Fin de validité,Type,Quantité,Prix Unitaire,TVA,Montant total HT,Montant total TTC**

1,16/01/2024,09:32:32,3307837143,30/09/2023,Carnet de Ticket t+,10,17,35 €,10,00 %,15,77 €,17,35 €

1,16/01/2024,09:32:32,3307837143,30/09/2023,Carnet de Ticket t+,10,17,35 €,10,00 %,15,77 €,17,35 €

The above output shows that Google Gemini Pro has extracted the information we need in CSV string format.

The last step is to convert this string into a CSV file.

Converting Google Gemini Pro Response to a CSV File

To convert the response to a CSV file, we first need to split the response into lines using the string object's strip() and split() methods. This will create a list of strings, where each string is a line in the response.

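Next, we will define the process_line() function that handles the special patterns in the response. Amounts such as 17,35 € and 10,00 % use a comma as the decimal separator, so splitting on every comma would break each of them into two fields. The function temporarily replaces these amounts with a placeholder, splits the line on commas, and then restores the original amounts.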

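lines = full_response.strip().split('\n')

def process_line(line):
    # Amounts such as "17,35 €" or "10,00 %" use a comma as the decimal separator
    special_patterns = re.compile(r'\d+,\d+\s[€%]')
    temp_replacement = "TEMP_CURRENCY"
    currency_matches = special_patterns.findall(line)
    # Hide each amount behind a placeholder so its comma is not treated as a delimiter
    for match in currency_matches:
        line = line.replace(match, temp_replacement, 1)
    parts = line.split(',')
    # Put the original amounts back in the order they were found
    for i, part in enumerate(parts):
        if temp_replacement in part:
            parts[i] = currency_matches.pop(0)
    return parts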
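To see why this extra handling matters, the sketch below splits the sample row from the output above in two ways: naively on every comma, and with a regular expression that refuses to split on a comma followed by digits, a space, and € or %. The lookahead variant is an alternative of my own to the placeholder approach, not the method used in this tutorial, and split_row() is a hypothetical name.

```python
import re

row = ("1,16/01/2024,09:32:32,3307837143,30/09/2023,Carnet de Ticket t+,"
       "10,17,35 €,10,00 %,15,77 €,17,35 €")

# Split on commas that are NOT the decimal separator of an amount like "17,35 €"
FIELD_SEPARATOR = re.compile(r",(?!\d+\s[€%])")


def split_row(line):
    """Split a row into fields while keeping amounts such as '10,00 %' intact."""
    return FIELD_SEPARATOR.split(line)


print(len(row.split(",")))  # the naive split breaks every amount in two
print(split_row(row))
```

The naive split yields 15 pieces for a header of 11 columns, while the lookahead split keeps the four amounts whole and returns 11 fields. Both approaches share the same limitation: they rely on amounts always being followed by a space and a € or % sign.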

The rest of the process is straightforward.

We will open a CSV file for writing using the open() function with the mode argument set to 'w', the newline argument set to '', and the encoding argument set to utf-8. This will create a file object that we can use to write the CSV data.

Next, we will create a csv.writer object that writes the rows to the CSV file.

We will loop through all the items (CSV rows) in the lines list and write them to our CSV file.

csv_file_path = r'D:\Receipts\receipts.csv'

# Open the CSV file for writing
with open(csv_file_path, mode='w', newline='', encoding='utf-8') as csv_file:
    writer = csv.writer(csv_file)
    # Process each line in the data list
    for line in lines:
        processed_line = process_line(line)
        writer.writerow(processed_line)
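Because some fields contain commas, csv.writer wraps them in double quotes when writing. The sketch below uses an in-memory buffer instead of a file so that it can run anywhere. It writes two example rows and reads them back to confirm that every row has as many fields as the header. The rows_are_consistent() helper and the shortened sample rows are illustrative assumptions.

```python
import csv
import io

rows = [
    ["Date", "Prix Unitaire", "TVA"],
    ["16/01/2024", "17,35 €", "10,00 %"],
]


def rows_are_consistent(parsed_rows):
    """Check that every row has the same number of fields as the header."""
    width = len(parsed_rows[0])
    return all(len(r) == width for r in parsed_rows)


buffer = io.StringIO(newline="")
csv.writer(buffer).writerows(rows)
text = buffer.getvalue()
print(text)  # fields that contain commas are written inside double quotes

parsed = list(csv.reader(io.StringIO(text, newline="")))
print(rows_are_consistent(parsed))
```

A check like this can be run on the real receipts.csv as well, which helps catch rows where the model returned more or fewer values than the header has columns.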

Once you execute the above script, you will find the CSV file in your destination path, containing the information from your input receipt images.

Conclusion

Extracting information from PDFs and images is a crucial task for data analysts. In this tutorial, you saw how to use Google Gemini Pro, a state-of-the-art multimodal large language model, to extract information from a receipt image. You can use the same technique to extract any other type of information by simply changing the text prompt.

Feel free to leave your feedback and suggestions!