OpenAI announced the GPT-4o (omni) model on May 13, 2024. As the name suggests, GPT-4o can process multimodal inputs, such as text, images, and speech. According to OpenAI, GPT-4o is its best-performing large language model to date.

Among GPT-4o's many capabilities, I found its ability to analyze images and answer related questions especially impressive. In this article, I demonstrate some of the image analysis tests I ran with OpenAI GPT-4o.

Note: If you are interested in seeing how GPT-4o and Llama 3 compare for zero-shot text classification, check out my previous article.

So, let's begin without further ado.

Importing and Installing Required Libraries

The following script installs the OpenAI Python library that you will use to access the OpenAI API.

pip install openai

The script below imports the libraries required to run the code in this article.

import os
import base64
from IPython.display import display, HTML
from IPython.display import Image
from openai import OpenAI

General Image Analysis

Let's first take an image and ask some general questions about it. The script below displays the sample image we will use for this example.

# image source: https://healthier.stanfordchildrens.org/wp-content/uploads/2021/04/Child-climbing-window-scaled.jpg
image_path = r"D:\Datasets\sofa_kid.jpg"
img = Image(filename=image_path, width=600, height=600)
img

Next, we define the encode_image64() function, which accepts an image path and returns the image as a base64-encoded string. The OpenAI API accepts local images in this format.

The script below also creates the OpenAI client object we will use to call the OpenAI API.

Output:

def encode_image64(image_path):
    # Read the image in binary mode and return it as a base64 string
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")

base64_image = encode_image64(image_path)

# The API key is read from the OPENAI_API_KEY environment variable
client = OpenAI(
    api_key=os.environ.get('OPENAI_API_KEY'),
)

Next, we will define a function that accepts a text query and passes it to the OpenAI client's chat.completions.create() method. You need to pass the model name and the list of messages to this method.

Inside the list of messages, we specify that the system must act as a babysitter. Next, we pass it the text query along with the image that we want to analyze.
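The message structure described above can be sketched as a standalone helper. This is a minimal sketch, not part of the original scripts: the name build_image_messages() and its parameters are hypothetical, and the fallback to JPEG when the file type cannot be guessed is an assumption. It only builds the payload and does not call the API.

```python
import base64
import mimetypes


def build_image_messages(query, image_path, system_prompt="You are a babysitter."):
    """Return a chat `messages` list with one text part and one image part."""
    # Guess the MIME type from the file extension (e.g. .jpg -> image/jpeg).
    # Falling back to JPEG is an assumption made for this sketch.
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None:
        mime_type = "image/jpeg"

    # Base64-encode the raw image bytes.
    with open(image_path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("utf-8")

    # Local images are passed as a data URL inside the "image_url" part.
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": [
            {"type": "text", "text": query},
            {"type": "image_url", "image_url": {"url": data_url}},
        ]},
    ]
```

The returned list can be passed as the messages argument of chat.completions.create(). Deriving the MIME type from the file name keeps the data URL prefix consistent with the actual image type.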

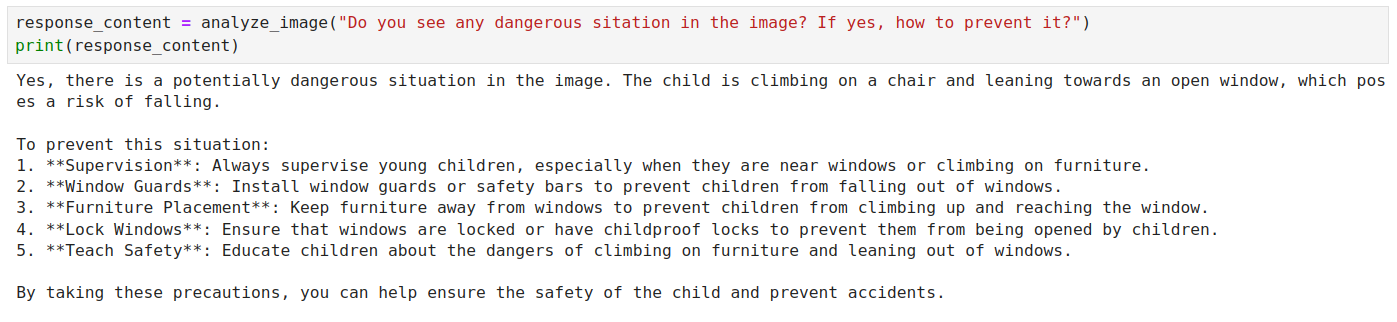

Finally, we ask a question using the analyze_image() method. The output shows that the GPT-4o model has successfully identified the potentially dangerous situation in the image and recommends prevention strategies.

def analyze_image(query):
    # Send the text query together with the base64-encoded image
    response = client.chat.completions.create(
        model="gpt-4o",
        temperature=0,
        messages=[
            {"role": "system", "content": "You are a babysitter."},
            {"role": "user", "content": [
                {"type": "text", "text": query},
                # The sample image is a JPEG, so the data URL uses image/jpeg
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"}}
            ]}
        ]
    )
    return response.choices[0].message.content

response_content = analyze_image("Do you see any dangerous situation in the image? If yes, how to prevent it?")
print(response_content)

Output:

Graph Analysis

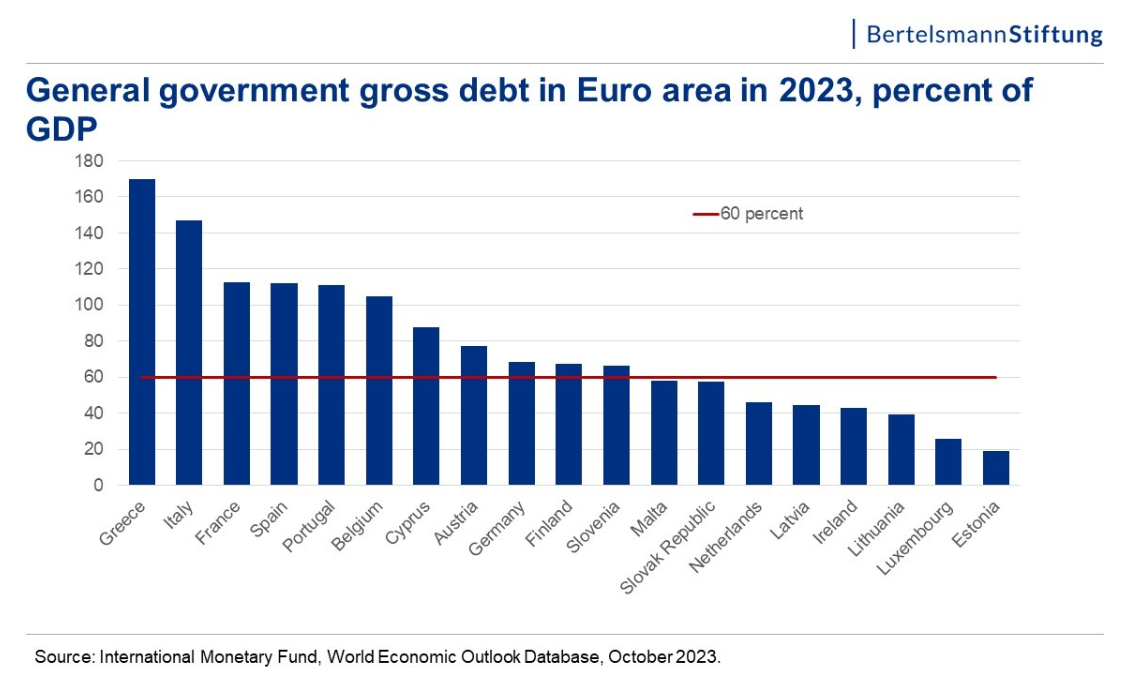

I found GPT-4o to be highly accurate for graph analysis. As an example, I asked questions about the following graph.

# image source: https://globaleurope.eu/wp-content/uploads/sites/24/2023/12/Folie2.jpg
image_path = r"D:\Datasets\Folie2.jpg"
img = Image(filename=image_path, width=800, height=800)
img

Output:

The process remains the same. We pass the graph image and the text question to the chat.completions.create() method. In the following script, I tell the model to act like a graph visualization expert and summarize the graph.

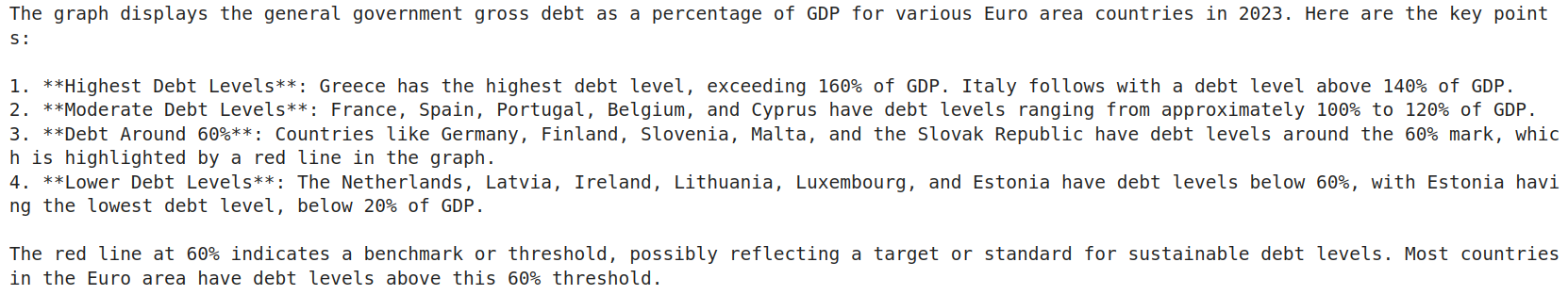

The model output is highly detailed, and the analysis is remarkably accurate.

base64_image = encode_image64(image_path)

def analyze_graph(query):
    # Send the text query together with the base64-encoded graph image
    response = client.chat.completions.create(
        model="gpt-4o",
        temperature=0,
        messages=[
            {"role": "system", "content": "You are an expert in graphs and visualization."},
            {"role": "user", "content": [
                {"type": "text", "text": query},
                # The graph image is a JPEG, so the data URL uses image/jpeg
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"}}
            ]}
        ]
    )
    return response.choices[0].message.content

response_content = analyze_graph("Can you summarize the graph?")
print(response_content)

Output:

The GPT-4o model can also convert graphs into structured data, such as tables, as the following script demonstrates.

response_content = analyze_graph("Can you convert the graph to table such as Country -> Debt?")
print(response_content)

Output:
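If the reply arrives as a pipe-delimited Markdown table (an assumption, since the model decides its own output format), the text can be turned into Python records for further processing. The helper parse_markdown_table() below is a hypothetical sketch, and the sample string uses placeholder values rather than real model output.

```python
def parse_markdown_table(text):
    """Parse pipe-delimited Markdown table rows in `text` into a list of dicts."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # skip any prose around the table
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Skip the header separator row, e.g. |------|:----:|
        if all(cell and set(cell) <= set("-: ") for cell in cells):
            continue
        rows.append(cells)
    if not rows:
        return []
    # The first remaining row is the header; the rest are data rows.
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


# Placeholder text standing in for a model reply (not real output)
sample_reply = """Here is the table:

| Country | Debt |
|---------|------|
| Country A | 10 |
| Country B | 20 |
"""

for record in parse_markdown_table(sample_reply):
    print(record)
```

All values stay as strings. Converting them to numbers is left to the caller because units and formatting depend on the graph.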

Image Sentiment Prediction

Another common image analysis application is predicting sentiments from a facial image. GPT-4o is also highly accurate and detailed in this regard. As an example, we will ask the model to predict the sentiment expressed in the following image.

# image source: https://www.allprodad.com/the-3-happiest-people-in-the-world/
image_path = r"D:\Datasets\happy_men.jpg"
img = Image(filename=image_path, width=800, height=800)
img

I tell the model that it is a helpful psychologist and ask it to predict the sentiment from the input facial image. The output shows that the model can detect and explain the sentiment expressed in an image.

base64_image = encode_image64(image_path)

def predict_sentiment(query):
    # Send the text query together with the base64-encoded facial image
    response = client.chat.completions.create(
        model="gpt-4o",
        temperature=0,
        messages=[
            {"role": "system", "content": "You are a helpful psychologist."},
            {"role": "user", "content": [
                {"type": "text", "text": query},
                # The image is a JPEG, so the data URL uses image/jpeg
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"}}
            ]}
        ]
    )
    return response.choices[0].message.content

response_content = predict_sentiment("Can you predict facial sentiment from the input image?")
print(response_content)

Output:

The person in the image appears smiling, which generally indicates a positive sentiment such as happiness or joy.

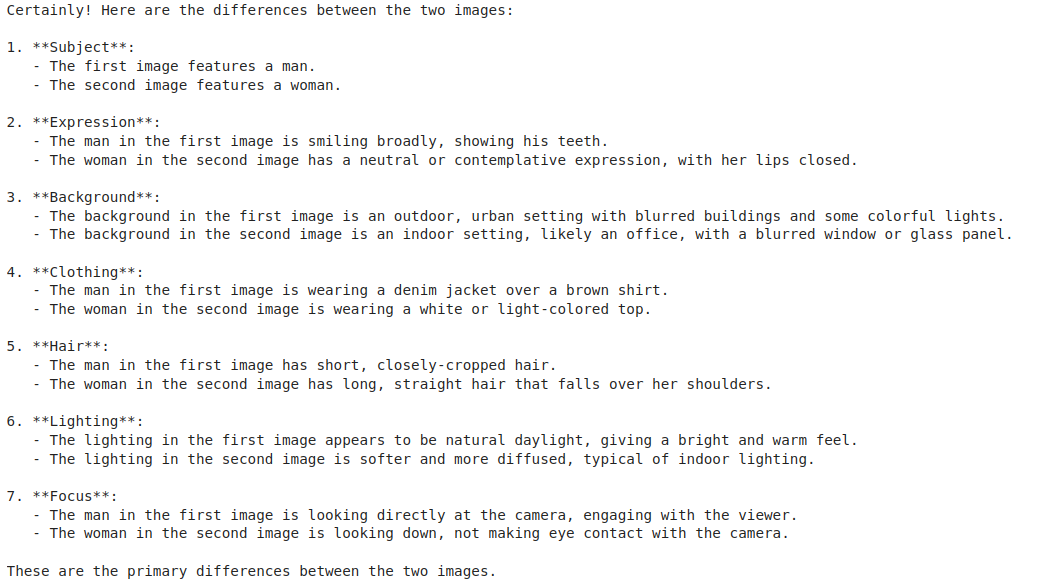

Analyzing Multiple Images

Finally, GPT-4o can process multiple images in a single request. For example, we will find the differences between the following two images.

# Aliased so it does not shadow the Image class imported from IPython.display
from PIL import Image as PILImage
import matplotlib.pyplot as plt

# image1 source: https://www.allprodad.com/the-3-happiest-people-in-the-world/
# image2 source: https://www.shortform.com/blog/self-care-for-grief/
image_path1 = r"D:\Datasets\happy_men.jpg"
image_path2 = r"D:\Datasets\sad_woman.jpg"

# Open the images using Pillow
img1 = PILImage.open(image_path1)
img2 = PILImage.open(image_path2)

# Create a figure to display the images side by side
fig, axes = plt.subplots(1, 2, figsize=(10, 5))

# Display the first image
axes[0].imshow(img1)
axes[0].axis('off')  # Hide axes

# Display the second image
axes[1].imshow(img2)
axes[1].axis('off')  # Hide axes

# Show the plot
plt.tight_layout()
plt.show()

Output:

To process multiple images, you must pass both images to the chat.completions.create() method, as shown in the following script. The image specified first is treated as the first image, and so on.
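As a small illustration of that ordering, the user content can be assembled in a loop so that each image part follows the text part in the order given. The helper name build_multi_image_content() is hypothetical, and it assumes the images have already been converted to data URLs (or are regular image URLs).

```python
def build_multi_image_content(query, image_urls):
    """Build the user `content` list: the text query first, then one part per image."""
    content = [{"type": "text", "text": query}]
    for url in image_urls:
        # Images are appended in order, so the first URL is "the first image".
        content.append({"type": "image_url", "image_url": {"url": url}})
    return content
```

The result can be used as the "content" value of the user message passed to chat.completions.create().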

The script below asks the GPT-4o model to explain all the differences between the two images. In the output, you can see all the differences, even minor ones, between the two images.

base64_image1 = encode_image64(image_path1)
base64_image2 = encode_image64(image_path2)

def compare_images(query):
    # Send the text query together with both base64-encoded images
    response = client.chat.completions.create(
        model="gpt-4o",
        temperature=0,
        messages=[
            {"role": "system", "content": "You are a helpful psychologist."},
            {"role": "user", "content": [
                {"type": "text", "text": query},
                # Both images are JPEGs; their order here defines "first" and "second"
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image1}"}},
                {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{base64_image2}"}}
            ]}
        ]
    )
    return response.choices[0].message.content

response_content = compare_images("Can you explain all the differences in the two images?")
print(response_content)

Output:

Conclusion

The GPT-4o model is highly accurate for image analysis tasks. From graph analysis to sentiment prediction, the model can identify and analyze even minor details in an image.

On the downside, the model can be expensive for some users. At the time of writing, it costs $5 per million input text tokens and $15 per million output text tokens. For images, processing a 150 x 150 pixel image costs $0.001275.

However, if your budget allows, I recommend using it, as it can save you a lot of time and effort for image analysis tasks.
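The image price quoted above can be reproduced with a short calculation. The sketch below assumes the tile-based accounting OpenAI documented for GPT-4o's high-detail mode at the time (85 base tokens plus 170 tokens per 512-pixel tile, after shrinking the image to fit 2048 x 2048 and then to a shortest side of at most 768 pixels) and the $5 per million input tokens rate. The function names are hypothetical, and both the rule and the price may have changed since, so treat the result as an estimate.

```python
import math


def estimate_image_tokens(width, height):
    """Estimate input tokens for one high-detail image (assumed tile rule)."""
    # Step 1: shrink to fit inside 2048 x 2048; small images are not enlarged.
    scale = min(1.0, 2048 / max(width, height))
    width, height = width * scale, height * scale
    # Step 2: shrink so the shortest side is at most 768 pixels.
    scale = min(1.0, 768 / min(width, height))
    width, height = width * scale, height * scale
    # Step 3: count 512 x 512 tiles; 170 tokens per tile plus 85 base tokens.
    tiles = math.ceil(width / 512) * math.ceil(height / 512)
    return 85 + 170 * tiles


def estimate_image_cost(width, height, usd_per_million_input_tokens=5.0):
    """Convert the token estimate into US dollars at the assumed input rate."""
    return estimate_image_tokens(width, height) * usd_per_million_input_tokens / 1_000_000


print(estimate_image_tokens(150, 150))
print(estimate_image_cost(150, 150))
```

A 150 x 150 image fits in a single tile, which gives 85 + 170 = 255 tokens, and 255 tokens at $5 per million is the $0.001275 mentioned above.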